A Big Week in Medical A.I.

Multiple new reports indicate where we are headed

Every week brings a batch of new studies in life science and medical A.I., but this one stands out for an unusually rich and diverse cluster of reports, both positive and negative, that provide new insights.

Polaris

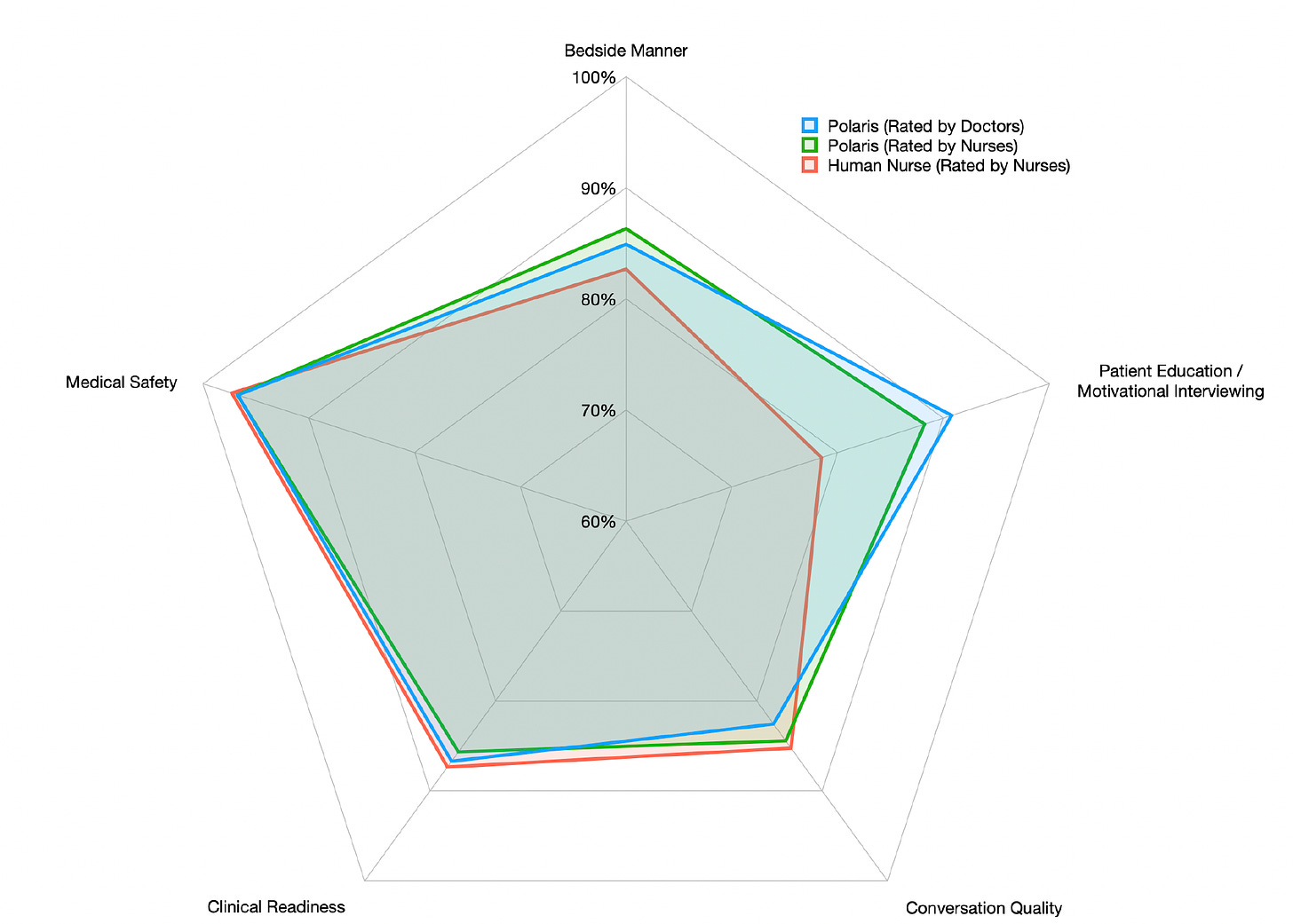

The week started at the Nvidia GTC conference, which was packed with presentations and panels on biomedical A.I. Jensen Huang, Nvidia’s CEO, gave a 2-hour keynote on Monday that briefly spotlighted the work at Hippocratic AI, which published a preprint on what they call Polaris, “the first safety-focused large language model constellation for real-time patient-AI healthcare conversations.” Hippocratic AI recruited 1,100 nurses and 130 physicians to act as patients in simulated conversations with their >1 trillion-parameter LLM, often lasting more than 20 minutes. As you can see in the Figure below, Polaris performance, rated by nurses, was as good as or better than that of human nurses on each of the 5 parameters assessed.

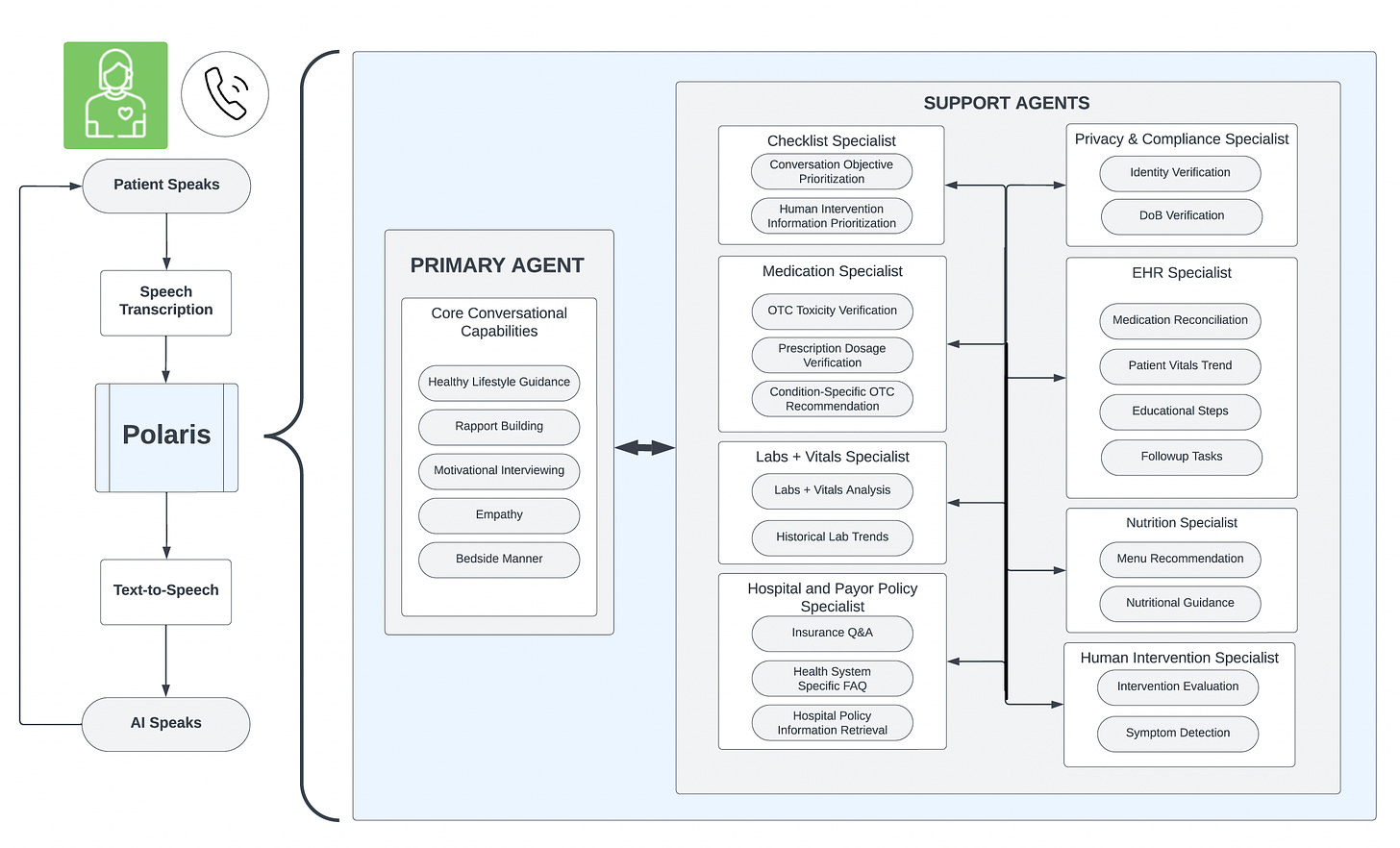

The architecture of Polaris is very different from GPT-4, LLaMA, and other large language models because it consists of multiple domain-specific agents that support a primary agent trained for nurse-like conversation, along with speech recognition of what the patient says and a digital human avatar face that communicates with the patient. The support agents, ranging from 50 to 100 billion parameters, provide knowledge on labs, medications, nutrition, electronic health records, checklists, privacy and compliance, hospital and payor policy, and when a human needs to be brought into the loop.

Having these domain-specific support agents led to improved performance over GPT-4 and LLaMA-2 for medical accuracy and safety. Hippocratic AI is moving forward to test Polaris at much larger scale, with an emphasis on safety.
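To make the "constellation" idea more concrete, here is a toy sketch of a primary conversational agent that consults domain-specific support agents and can hand off to a human. Everything in it is a hypothetical illustration, not Hippocratic AI's method: the class names (`SupportAgent`, `PrimaryAgent`), the keyword routing, and the `"ESCALATE"` convention are assumptions, and in Polaris each support agent is itself a large language model rather than a one-line function.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SupportAgent:
    """Hypothetical domain specialist; in Polaris this role is played by a 50-100B-parameter LLM."""
    domain: str
    triggers: tuple[str, ...]       # naive keyword routing (an assumption for illustration)
    advise: Callable[[str], str]    # returns a note for the primary agent

    def applies_to(self, utterance: str) -> bool:
        text = utterance.lower()
        return any(t in text for t in self.triggers)


@dataclass
class PrimaryAgent:
    """Hypothetical nurse-like conversational agent that consults its support agents."""
    support: list[SupportAgent] = field(default_factory=list)

    def respond(self, utterance: str) -> dict:
        # Gather notes only from support agents whose domain the utterance touches.
        notes = {a.domain: a.advise(utterance) for a in self.support if a.applies_to(utterance)}
        # Any support agent can request a human-in-the-loop handoff by prefixing "ESCALATE".
        escalate = any(n.startswith("ESCALATE") for n in notes.values())
        if escalate:
            reply = "Let me bring in a nurse to help with this."
        elif notes:
            reply = "Before answering, I checked: " + "; ".join(notes.values())
        else:
            reply = "No specialist input needed for this turn."
        return {"consulted": sorted(notes), "escalate": escalate, "reply": reply}


# Usage with two hypothetical support agents.
meds = SupportAgent("medications", ("dose", "pill", "mg"),
                    lambda u: "confirm the dose against the prescription on file")
handoff = SupportAgent("human_in_the_loop", ("chest pain", "can't breathe"),
                       lambda u: "ESCALATE: possible emergency symptom")
nurse_agent = PrimaryAgent([meds, handoff])

print(nurse_agent.respond("Should I take another 20 mg pill?"))
print(nurse_agent.respond("I have chest pain"))
```

Keyword matching is only a placeholder for routing; the point of the sketch is the division of labor, where specialist checks and the decision to bring in a human sit alongside, rather than inside, the conversational agent.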

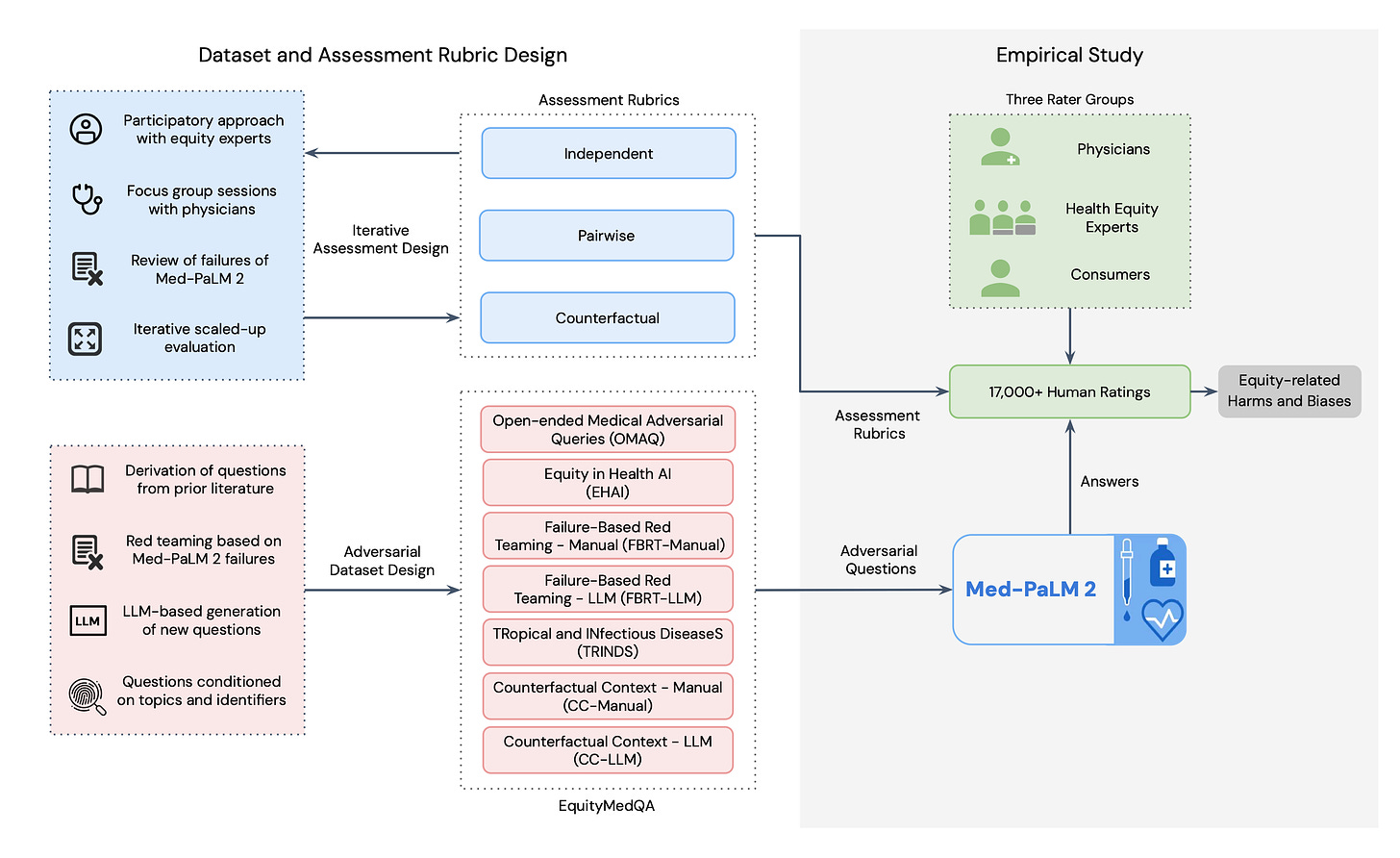

Concurrently, Google AI published a preprint on tackling LLM biases to promote health equity and safety (study design shown above). It is refreshing to see both of these reports address vital issues that have previously been under-emphasized.

I was on an invigorating panel at GTC with Peter Lee of Microsoft and Cathie Wood of ARK, moderated by Kimberly Powell, VP of Healthcare and Life Sciences at Nvidia. The archive of the panel hasn’t been posted yet, but I’ll add it when it’s available. Just a footnote to that: I also presented at the last live in-person GTC event, held in 2019. What a difference 5 years makes! At that time, there was little interest in medical A.I., and it was confined to medical imaging.

New, Desperately Needed Antibiotics

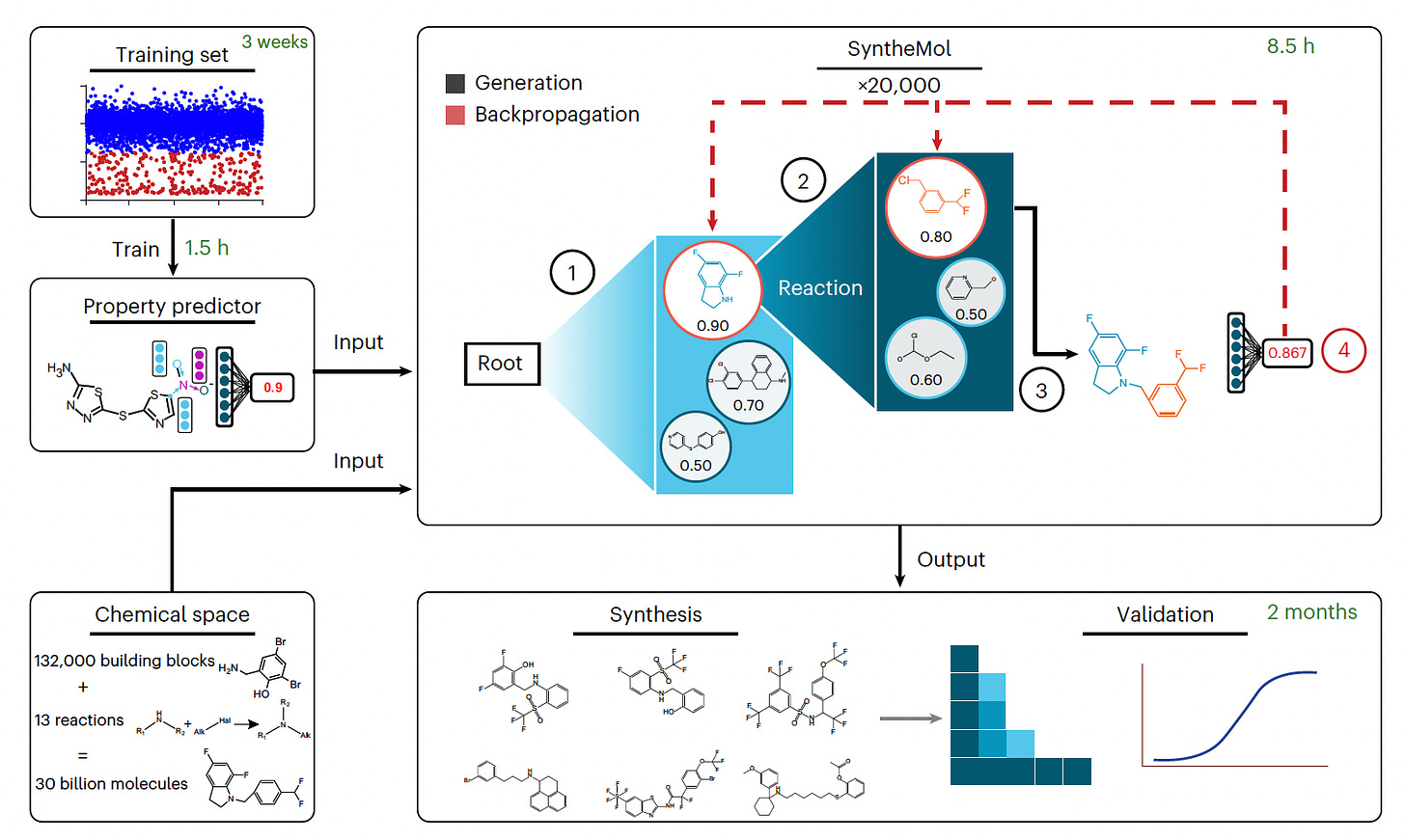

Following the recent A.I.-enabled discovery by Jim Collins and colleagues of the first new structural class of antibiotics in decades, with multiple compounds potent against methicillin-resistant Staph aureus (as reviewed in our recent podcast), this week Jonathan Stokes and collaborators published SyntheMol. It’s a generative A.I. model that was used to address the WHO’s number one priority for new antibiotics: one to treat Acinetobacter baumannii, a gram-negative opportunistic pathogen whose infections usually occur in healthcare settings and which is typically resistant to all antibiotics.

SyntheMol was built specifically to design small molecules that are easily (and cheaply) synthesized. From Enamine’s space of 30 billion molecules, about 13,500 were screened, 58 promising candidates were synthesized, and 6 were highly potent against A. baumannii.
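For a sense of scale, the experimental hit rate among the synthesized candidates, using only the numbers reported above, works out to roughly 1 in 10:

```latex
\text{hit rate} = \frac{6 \ \text{highly potent}}{58 \ \text{synthesized}} \approx 0.103 \approx 10\%
```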

As you well know, the pharma industry has largely abandoned antibiotic drug discovery programs, but these new reports highlight that academic labs, using A.I. models, can rapidly discover very potent new antibiotics that could be taken into clinical trials.

Pathology Foundation Models

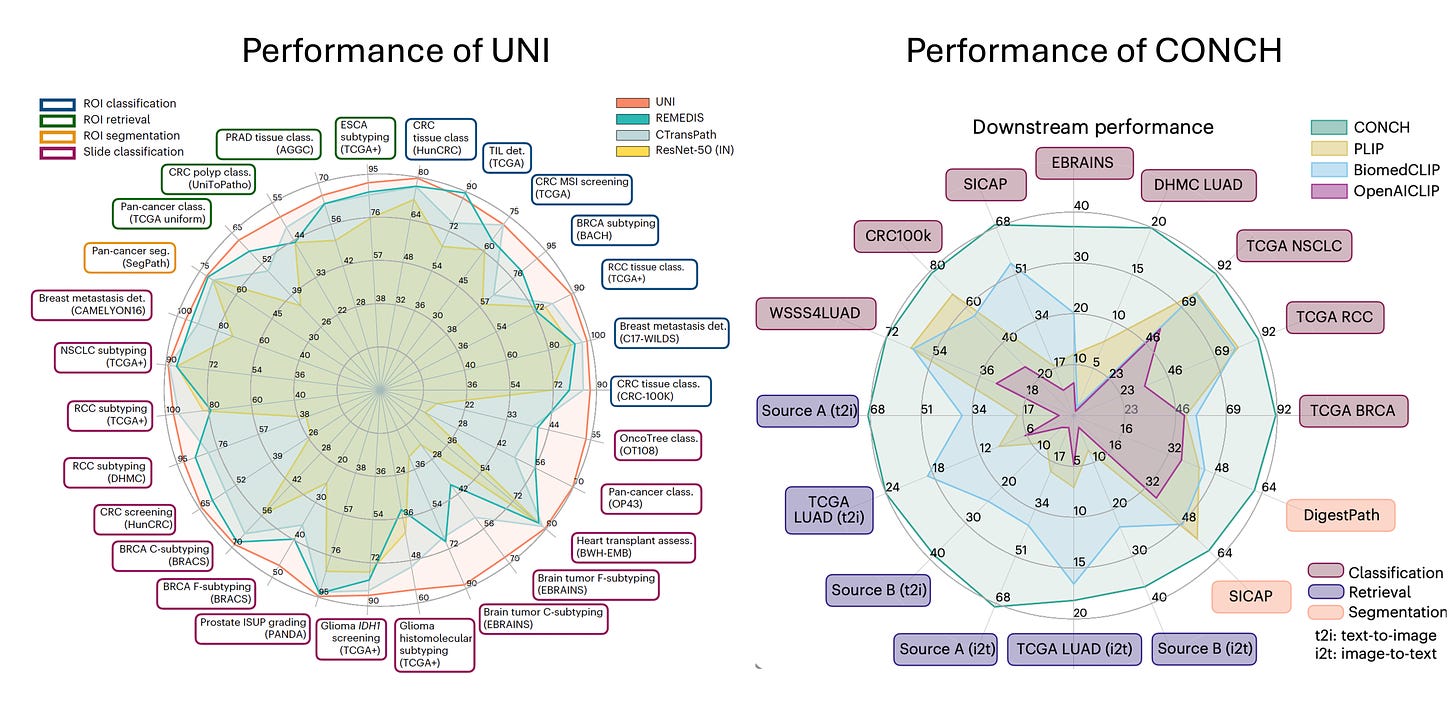

Faisal Mahmood and his colleagues at Harvard Medical School published 2 impressive pathology foundation model papers this week. UNI, a self-supervised model pre-trained on >100,000 whole-slide pathology images (comprising >100 million image patches) across 20 major tissue types, exceeded the performance of previous state-of-the-art pathology models on 34 computational pathology tasks, as shown in the left panel below. This is a nice step forward for a general-purpose unimodal pathology model.

The second report was on CONtrastive learning from Captions for Histopathology (CONCH), a visual-language foundation model trained on well over 1 million image-text pairs. Its high performance on many clinically relevant tasks is shown below at right.

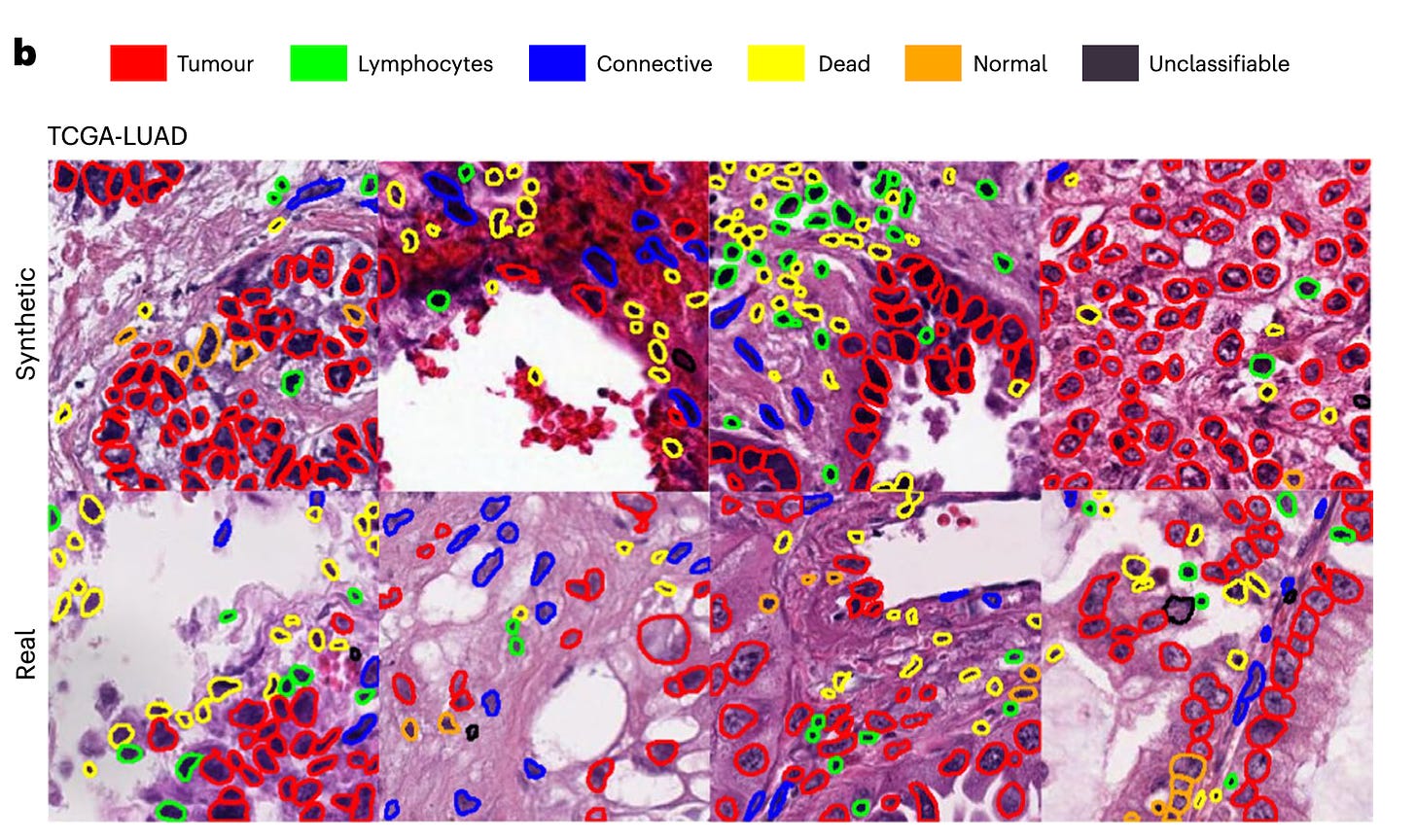

Oh, would you like to generate a whole-slide image from RNA-seq data? You can do that, too, with a diffusion model built by Olivier Gevaert and colleagues. It’s the DALL-E equivalent for generating pathology images. A set of real vs. synthetic images is shown below.

Radiologists and A.I.

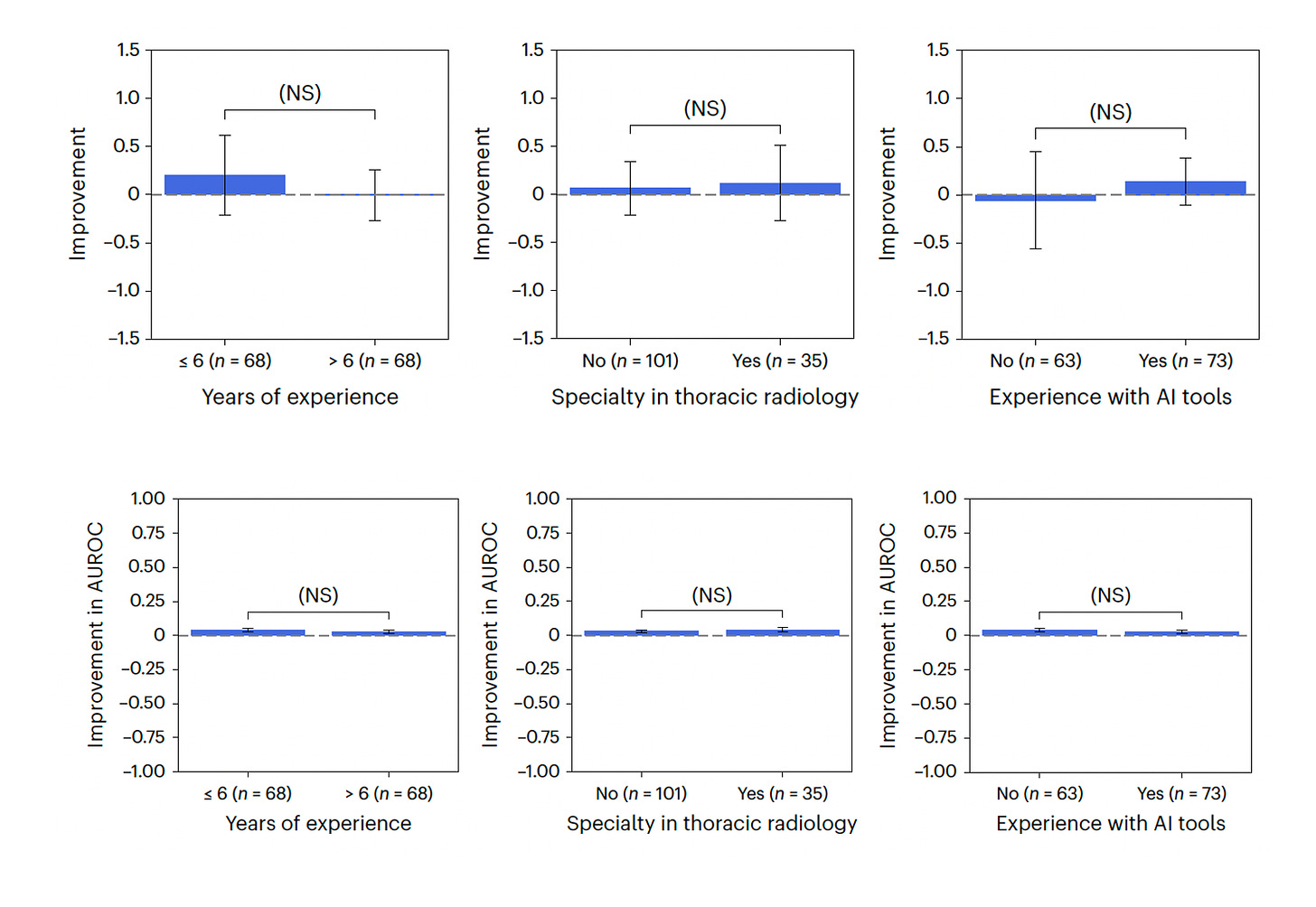

It’s never simple or straightforward when it comes to A.I. support, as we learned from Pranav Rajpurkar and colleagues in their paper this week, which assessed 140 radiologists performing 12 chest X-ray tasks. The incremental benefit of A.I. support was highly variable and unpredictable. You would think the less experienced radiologists would derive more benefit from A.I. support, but that wasn’t the case, nor did specialty in chest radiology or experience with A.I. tools predict who benefited (Figure below). This study makes the case that individualized approaches may well be needed to promote synergy between radiologists and A.I. tools, and that may apply to clinicians more broadly.

A second study compared the performance of a deep neural network with that of 15 radiologists in diagnosing heart abnormalities (hypertrophy or dilation of the left ventricle) from chest X-rays. The A.I.’s accuracy exceeded that of every radiologist.

A.I. Health Misinformation

Four LLMs were assessed for their potential to convey health misinformation in a British Medical Journal study. The LLMs (GPT-4, PaLM2, Claude, and Llama) did not provide consistent information. On the claim that sunscreen causes skin cancer, only Claude gave the right response (no, it doesn’t). The same was true for the claim that an alkaline diet cures cancer (it doesn’t). For vaccines causing autism, or hydroxychloroquine being a cure for Covid, all but GPT-4 gave the right answer (no, and no). The overall results spoke well for Claude, which had more safeguards, but clearly there’s potential for LLMs to propagate health misinformation. Further, the outputs can be used by individuals or entities to amplify these falsehoods. More use of reinforcement learning from human feedback (RLHF) and other strategies will be needed to get this on track.

Summary

A.I. is moving fast in the biomedical space. Liabilities such as safety, bias, worsening health equity, and misinformation are being identified and are starting to be addressed. More self-supervised medical foundation models are showing up in new disciplines, beyond the first one, published for the retina last September. The power of A.I. to discover new drugs for areas of great unmet need has now been independently replicated for antibiotics. The editors at Nature Medicine wrote a solid and succinct editorial this week entitled “How to support the transition to AI-powered healthcare.” My take on it is that we can't get to A.I.-powered healthcare without compelling prospective clinical trial evidence in the real world of medical care, with diverse participants. That’s what we’re missing now, and I hope we will start to see it soon. I am and remain optimistic about A.I.’s transformation of life science and medicine in the years ahead. It’s still early.

Thank you for reading and subscribing to Ground Truths.

Please share the post with your friends and colleagues if you found it useful.

All content on Ground Truths—newsletter analyses and podcasts—is free.

Voluntary paid subscriptions all go to support Scripps Research.

Ground Truths now has a YouTube channel for all the podcasts. Here’s a list of the people I’ve interviewed that includes a few that will soon be posted or are scheduled.

Good article and glad to have someone with clinical experience, research experience, and a passion for tech such as yourself at the table.

I recently learned of Perplexity AI, and I’ve tried it for a few clinical questions. It is still not as good as humanly doing the hard work of PubMed and reference book synthesis, but so much faster of course… and it cites references. They are real clickable references! Often from journal sources, but also from more watered-down educational sites like WebMD.

Let us know if you have any experience with this one someday. It’s hard to keep up with the AI Cambrian explosion.

Extraordinary week, and extraordinary report, Eric. I don't know of any other observer/reporter in the medical AI space who's anywhere near as comprehensive.

When I spoke in December at the IHI Leadership Forum I said that medicine is having a "monolith moment," referring to "2001: A Space Odyssey." NOTHING is going to be the same now that we've touched it, and we all better fasten our seatbelts ... and LEARN about the new world we are already in. This column supports that analogy. WOW.