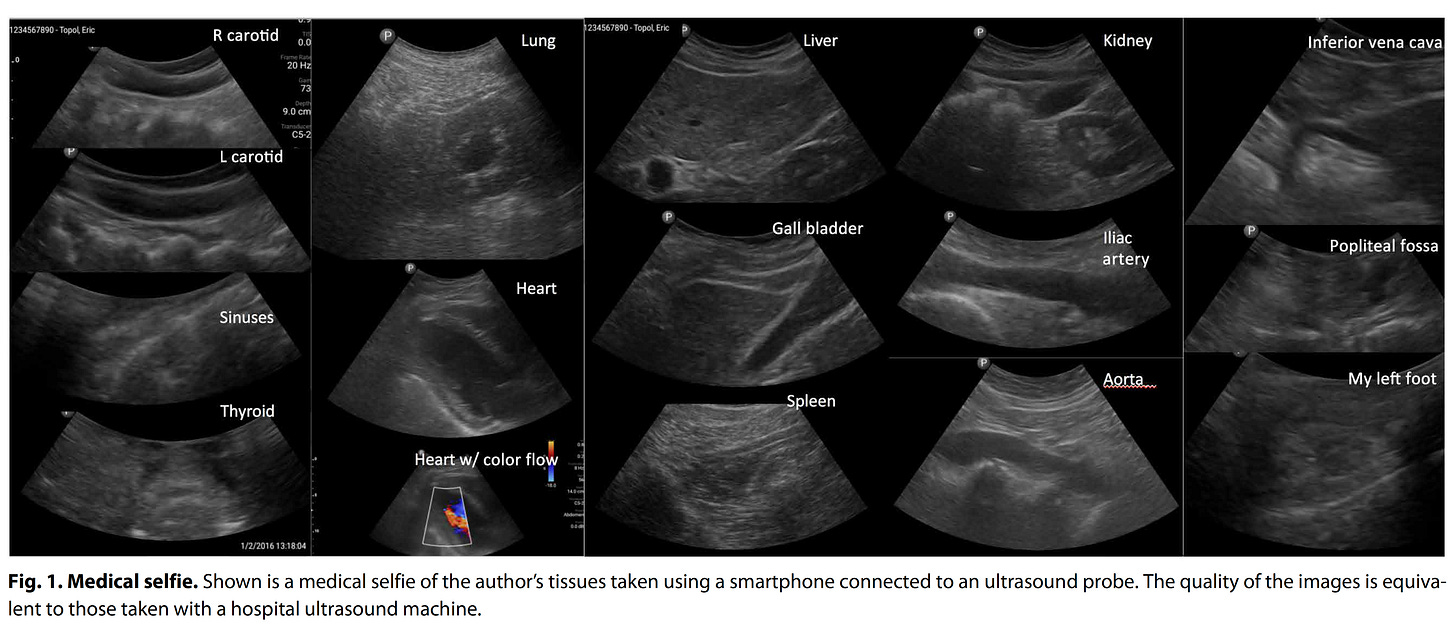

Several years ago I got my hands on a smartphone ultrasound device (a probe that attaches to the base of a smartphone) and was struck by the newfound ability to image any part of my body except the brain. I subsequently published the images (below) in a short piece reviewing the first decade of digital medicine.

The funny part of that sweep of imaging various organs is that my only prior experience with ultrasound was with the heart (echocardiography). So in order to image the gallbladder or the kidney, I had to resort to Google to watch a “how to” video. Even so, the total-body medical selfie took less than an hour to complete.

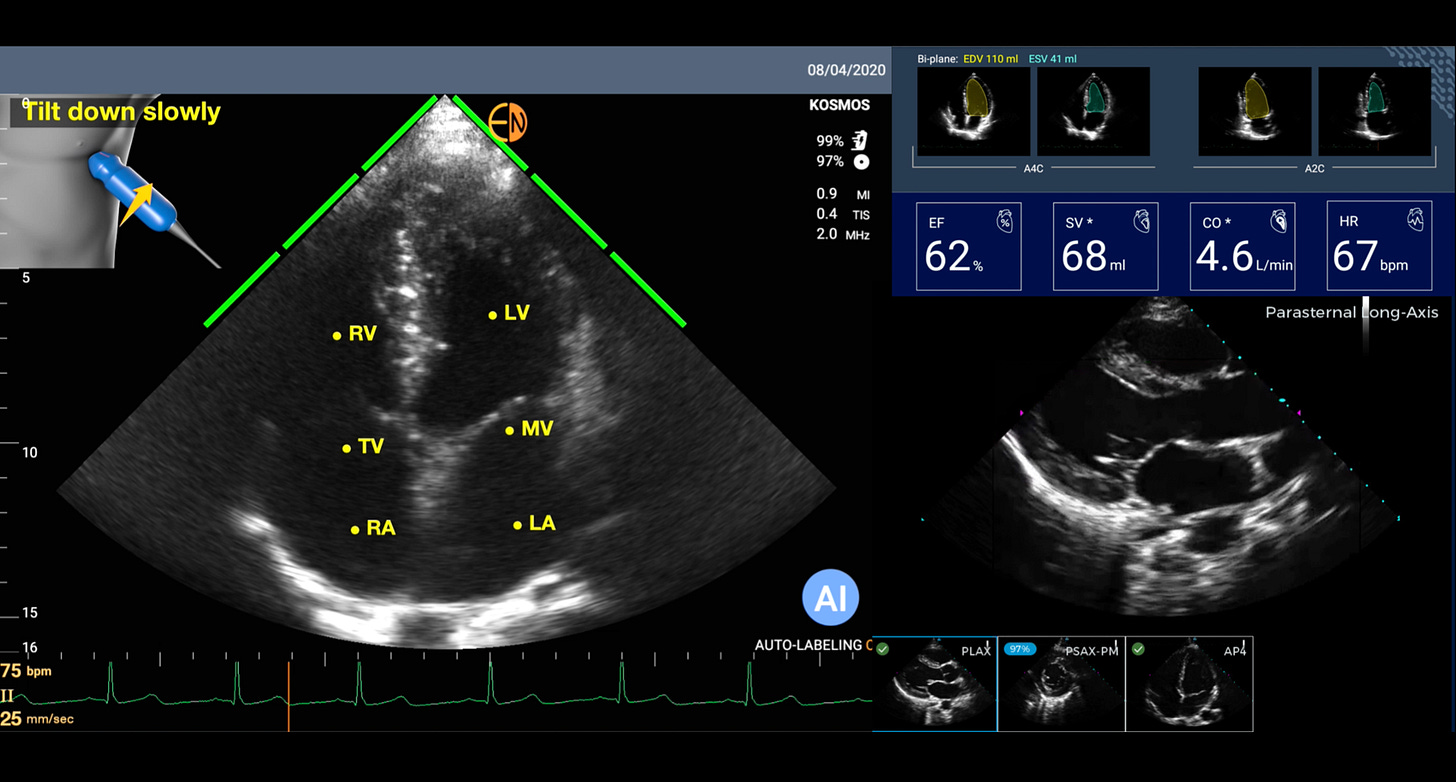

On the clinical side, as a cardiologist, I have used some form of handheld or smartphone ultrasound in place of a stethoscope since 2009. Why? It is obviously far more informative (figure below) to see the heart, with the dimensions of its cavities, the thickness of the heart muscle, the strength of heart muscle contraction, the structure of the valves, and more, than to listen to heart sounds (something I loved to do for decades).

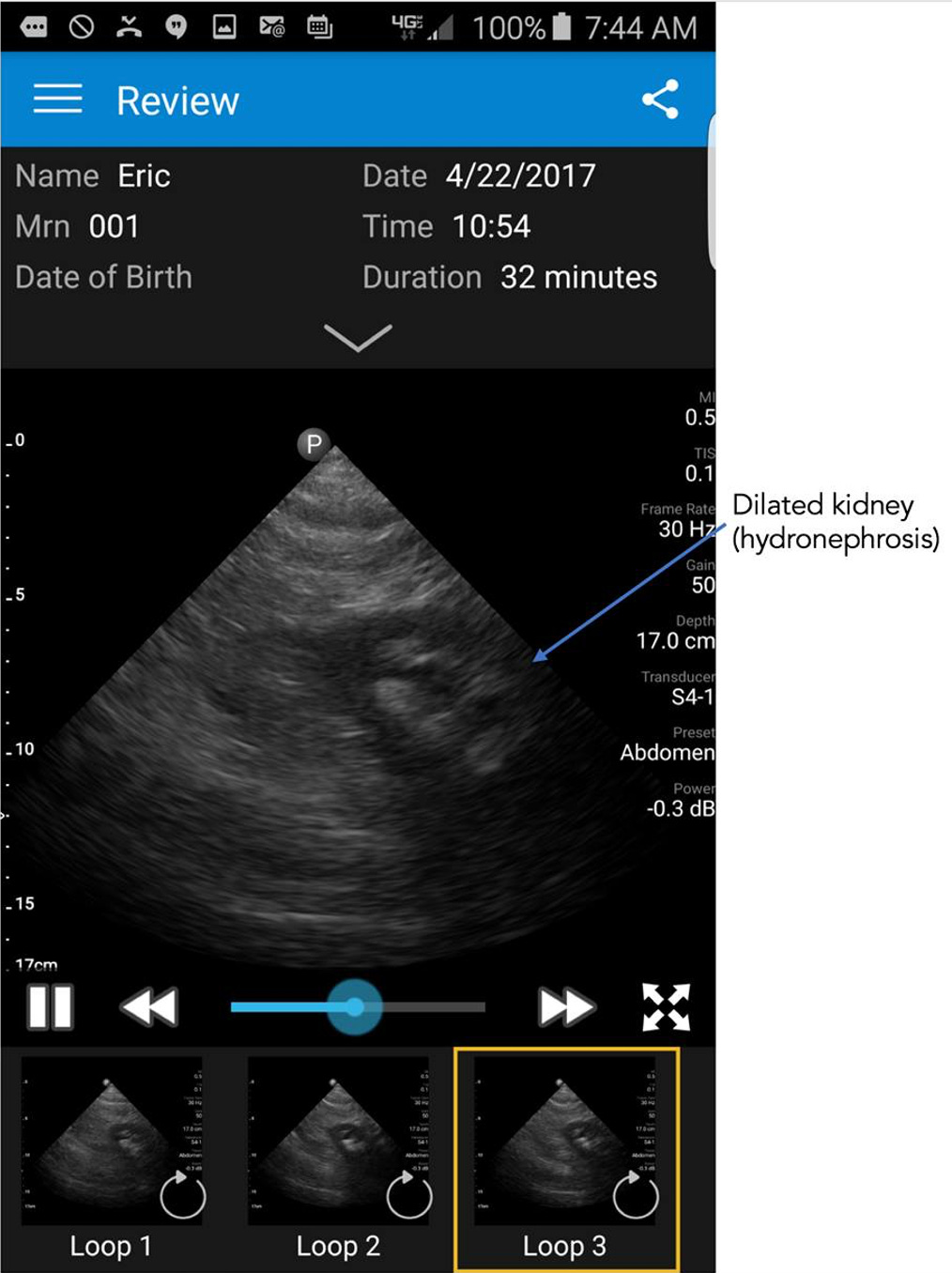

Ironically, months after that medical selfie I had severe abdominal pain unlike anything I had experienced before. So I got out the smartphone ultrasound probe to image my abdomen and found that I had a dilated left kidney, as seen below, which led me to believe I had a kidney stone and was suffering from renal colic.

You can just imagine the reaction of the emergency department physician when I presented and said, “I have abdominal pain and a dilated left kidney. Do you want to see it?” Nonetheless, he sent me for a CT scan, which showed similar dilation of the kidney and localized the stone.

To date, smartphone ultrasound has not caught on much in the United States for several reasons. There’s no reimbursement code for physicians to acquire the images, nor an easy mechanism to place them in the electronic medical record. In primary care and many specialties, clinicians lack experience in acquiring images and depend fully on referring patients to the ultrasound lab for formal studies by sonographers. Image quality in the early days (circa 2010) of handheld ultrasound was poor, but it has markedly improved and, in skilled hands, is now comparable in most patients to that of expensive ultrasound machines. The ultrasound probe devices that attach to smartphones are much too expensive, ranging from $2000 to $8000, with many requiring monthly subscription services to use the app. But the amount of money saved by not referring patients to an ultrasound lab would easily pay for these devices in a system with universal health care. In the United States there is no such incentive.

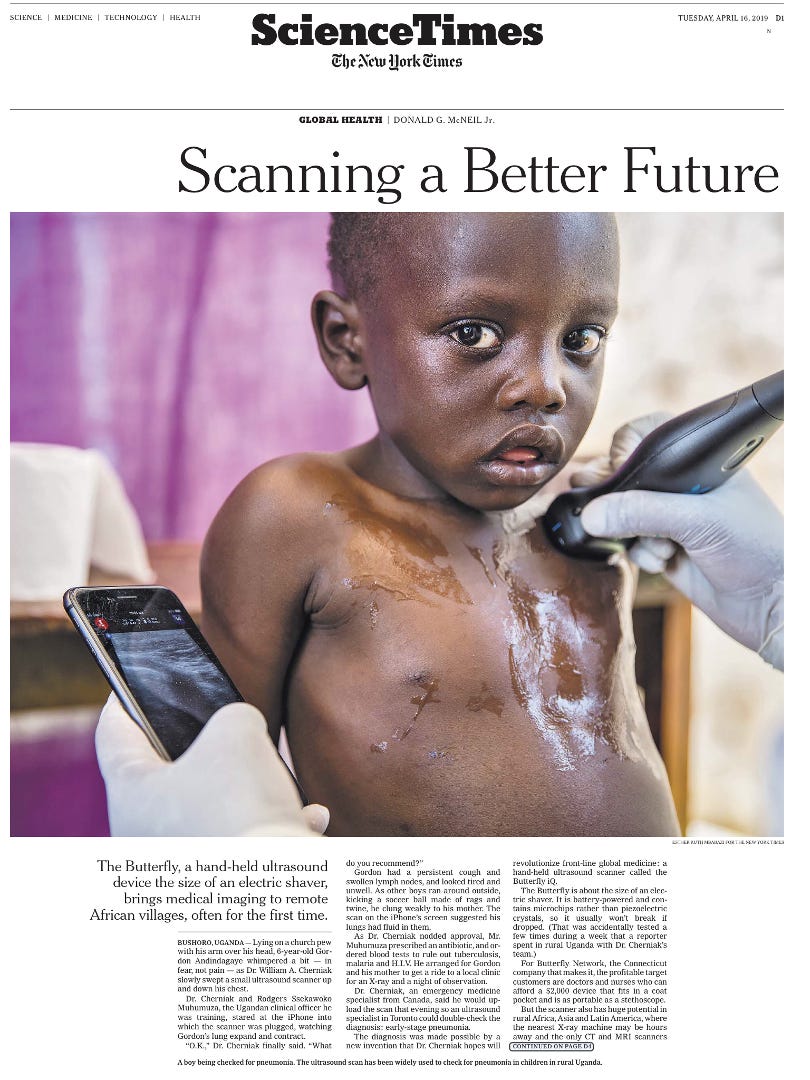

But that has not deterred the adoption of smartphone ultrasound in the hinterlands.

The 2019 New York Times piece above showed its use in remote Ugandan villages for diverse applications such as diagnosing pneumonia and tuberculosis, fetal monitoring, and more. I find this a particularly good example of how medical technology can help reduce inequities, here making high-resolution ultrasound imaging possible in low-income countries that would otherwise not have access. The images could potentially be obtained by untrained personnel and sent to experts for interpretation.

But now with AI that has changed. For the heart, there are a number of AI tools that will guide a person with no echocardiographic knowledge in acquiring images or interpreting them. All that is needed is for a person to put the smartphone probe on the left side of the chest. Then the AI can tell the person to move it up or down, or to rotate it clockwise or counterclockwise, until it “sees” the heart image and automatically captures it as a short video loop. It then automatically labels the structures and provides an interpretation. This sets up the potential for anyone to perform a screening echocardiogram and get an initial reading of it. An example is shown below with automated labeling (LV=left ventricle, LA=left atrium, MV=mitral valve) and the ejection fraction (EF%) and other outputs. If this can be done with the heart, the most challenging organ to image because of its motion, you can imagine how much easier it would be for many other organs when just a static shot is needed (like the kidney photo above).
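For readers wondering what the EF% output represents: ejection fraction is the share of the blood in the left ventricle at the end of filling that gets pumped out with each beat. Here is a minimal sketch of that arithmetic, assuming the end-diastolic and end-systolic volumes have already been estimated from the video loop. The function name and the example volumes are illustrative only, not taken from any particular device or AI tool.

```python
def ejection_fraction(edv_ml: float, esv_ml: float) -> float:
    """Left ventricular ejection fraction, as a percentage.

    edv_ml: end-diastolic volume (ventricle at its fullest), in mL
    esv_ml: end-systolic volume (ventricle at its emptiest), in mL
    """
    if edv_ml <= 0:
        raise ValueError("end-diastolic volume must be positive")
    if not 0 <= esv_ml <= edv_ml:
        raise ValueError("end-systolic volume must be between 0 and the end-diastolic volume")
    # Stroke volume (blood ejected per beat) divided by the filled volume.
    return (edv_ml - esv_ml) / edv_ml * 100.0


# Illustrative volumes only (hypothetical, not patient data).
ef = ejection_fraction(edv_ml=120.0, esv_ml=50.0)
print(f"EF = {ef:.0f}%")  # prints "EF = 58%"
```

The hard part for an AI tool is not this division but tracing the ventricle accurately enough across the loop to estimate the two volumes in the first place.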

In recent months there have been a number of reports of patient self-imaging for specific organs including the lungs, heart and breasts. Monitoring of high-risk patients with heart failure has been suggested as an ideal use case. It’s in the very early days and not practical when the cost of the ultrasound probes is so high. But if the probes could come down to the $100 cost threshold, the opportunity for self-imaging for various medical conditions could be extraordinary. Obviously, there would have to be full validation of the algorithms for acquisition and interpretation against gold standard sonography before this could be ready and scalable. There are clear downsides to democratizing smartphone ultrasound. Just think of an expectant mother who performs very frequent ultrasound imaging of her fetus. Like everything in medicine and technology, there are important tradeoffs to consider and risks to preempt or minimize.

When people talk about digital medicine they typically think about sensors for physiologic parameters like heart rate and rhythm or for chemistry parameters such as glucose. But there is another dimension that is in its earliest stages: ultrasound imaging. It will take a while for this to become mainstream, but hopefully from this post you can see its exciting potential.