The amazing power of "machine eyes"

The unanticipated deep learning A.I. dividends for medicine

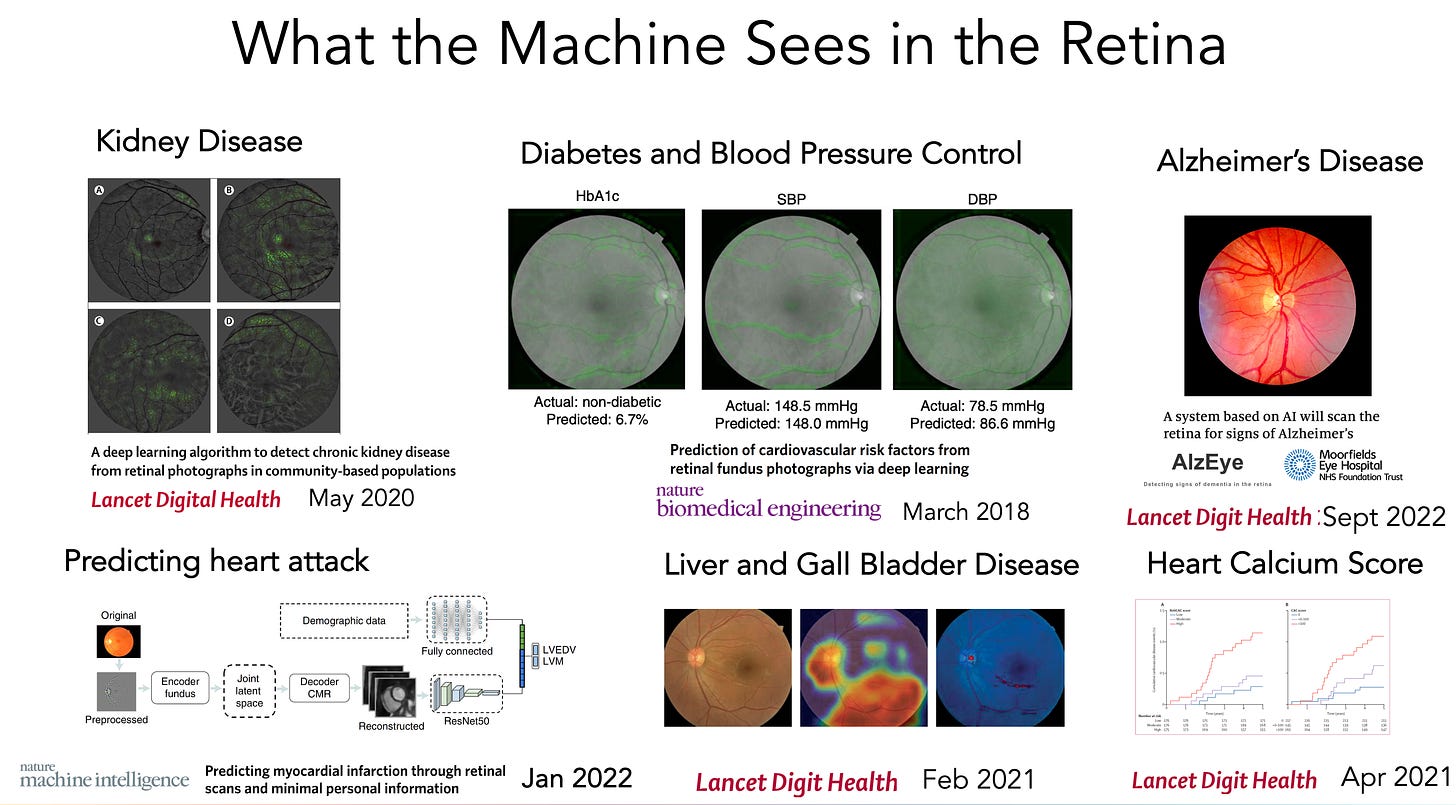

Today’s report on AI analysis of retinal vessel images to help predict the risk of heart attack and stroke, from over 65,000 UK Biobank participants, reinforces a growing body of evidence that deep neural networks can be trained to “interpret” medical images far beyond what was anticipated. Add that finding to last week’s multinational study that used deep learning of retinal photos to detect Alzheimer’s disease with good accuracy. In this post I am going to briefly review what has already been gleaned from two classic medical images, the retina and the electrocardiogram (ECG), as representative of the exciting capability of machine vision to “see” well beyond human limits. Obviously, machines aren’t really seeing or interpreting and don’t have eyes in the human sense, but they sure can be trained on hundreds of thousands (or millions) of images to produce extraordinary outputs. I hope that when you’ve read this you’ll agree this is a particularly striking advance, one that has not yet been realized in medical practice but has enormous potential.

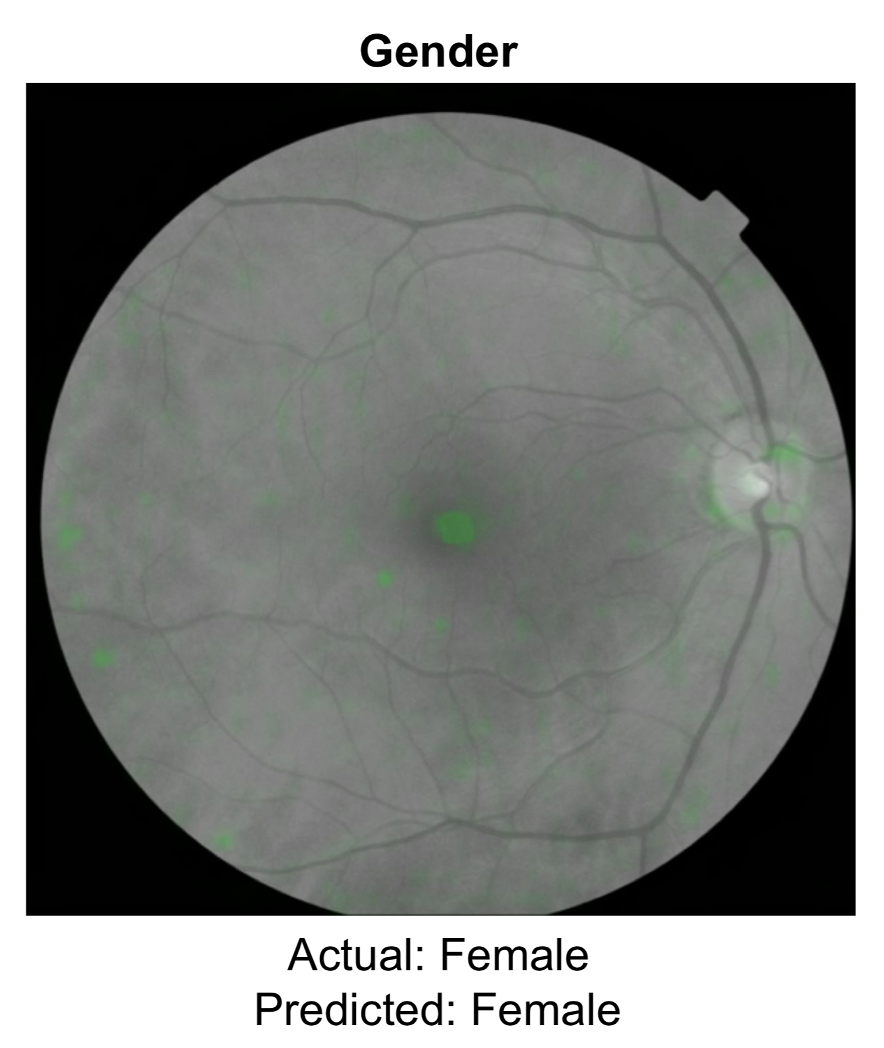

First, a review of deep learning applied to the retina. We should have known a few years back that there was something rich (dare I say eye-opening) in the retina that humans, including retinal experts, couldn’t see. While there are far simpler ways to determine gender, it’s a 50-50 toss-up for ophthalmologists looking at a retinal photo, which means there are no cues visible to human eyes. But now two neural network models have shown 97% accuracy for determining gender. That was just the beginning.

Of course, AI models have been shown to be quite useful for detecting eye diseases such as diabetic retinopathy. But this is about the indirect findings, the not-so-obvious ones. That work has now extended to detecting kidney disease, assessing control of blood glucose and blood pressure, detecting hepatobiliary disease, predicting heart attack (in a previous study), showing a close correlation between the retinal vessels and the coronary artery calcium score, and, prior to the new report above, the ongoing prospective assessment and tracking of Alzheimer’s disease (“AlzEye,” Moorfields Eye Hospital, UK, led by Professor Pearse Keane).

Nearly all of this work has been published in the last couple of years, and there’s no telling what else machines can see that is invisible to human eyes. Therein lies the future potential: taking a photo of your retina with your smartphone (ideally without having to dilate your pupils) to get an accurate readout of many of your body systems and organ functions.

Let’s turn to the ECG. As a cardiologist I’ve been reading these for over 35 years, and I enjoy quickly recognizing patterns like rhythm abnormalities, enlargement of the heart, low voltage, or pericarditis. But I am no match for machine eyes. Here is the first crop of deep learning ECG reports, which surprised me. I could never estimate a person’s age, sex, or hemoglobin by looking at an ECG, and it would be challenging to come up with an accurate assessment of a person’s ejection fraction, the main metric of the heart’s pump function.

But there’s more. Recently, deep neural networks have been trained on ECGs to pick up valve disease and diabetes, to predict atrial fibrillation (which carries a risk of stroke), and to predict fairly accurately the filling pressure (pulmonary capillary wedge pressure) of the left ventricle, the heart’s main pumping chamber.

For the retina and the ECG, none of these machine vision capabilities have been put into practice, with one major exception: the ejection fraction. Mayo Clinic did a randomized trial with primary care physicians, giving half of them the enhanced machine reading, while the other half, with only standard machine reads, served as controls. Detection of patients with low ejection fraction improved, and this health system now provides these deep learning outputs routinely. Flagging difficult diagnoses such as cardiac amyloidosis or hypertrophic cardiomyopathy is also being incorporated into Mayo’s leading-edge ECG interpretations.

I’m using the retina and ECG as examples, but the power of machine eyes extends to virtually all forms of medical images that have been assessed to date. In gastroenterology, multiple randomized trials have shown better detection of polyps with real-time machine vision than with expert human eyes alone. In pathology, deep learning can extract hidden features from digitized slides (whole slide imaging); in cancer, for example, it can determine the driver mutations, structural genomic variations, primary origin of the tumor, likely response to therapy, and prognosis, none of which pathologists can see (nicely reviewed here). I could go on and on with more examples, but I’ll spare you!

Now, with such advances, caveats and limitations have to be acknowledged. Except for the few randomized trials noted (enhanced ECG and colonoscopy), all of these reports are based on retrospective analyses. While these are useful, in silico analysis of complete, “cleaned” datasets is quite different from prospective assessment in the real world of clinical medicine. Accordingly, results of retrospective reports should be considered hypothesis-generating and need to be confirmed by prospective and/or randomized clinical trials.

The other concern is the framing of man vs. machine, an outgrowth of the world of AI that dates back well before Garry Kasparov took on Deep Blue at chess in 1997. While multiple studies convincingly show that machines “see” things expert clinicians can’t, there’s been no assessment of what machines miss but humans detect. In medical practice, the context of seeing the patient, reviewing the records, and much more gives clinicians a big edge that is not currently embedded in machine vision of medical images. This is one big part of applying multimodal AI, which my colleagues and I recently reviewed in Nature Medicine: the next frontier of AI, integrating multiple layers of data from disparate sources.

Remember that images are the sweet spot for deep learning, and we are further behind, especially in medicine, in achieving similar capabilities for speech and text. But the recent, rapid progress of foundation models outside of healthcare, some with more than a trillion parameters and now appearing in the life sciences, will undoubtedly accelerate these prospects.

In summary, I think this is a very exciting area in medicine that lets one’s imagination run wild about what machines can, and ultimately will, be taught to see. Thinking big. This brief summary also allows me to get back to my primary area of interest rather than dwelling on Covidology, at a time when there is a lull in the level of circulating virus threat. Indeed, I look forward to not having to try to cover Covid at some point in the future and to writing much more about the enthralling parts of biomedicine.

Thanks for reading.