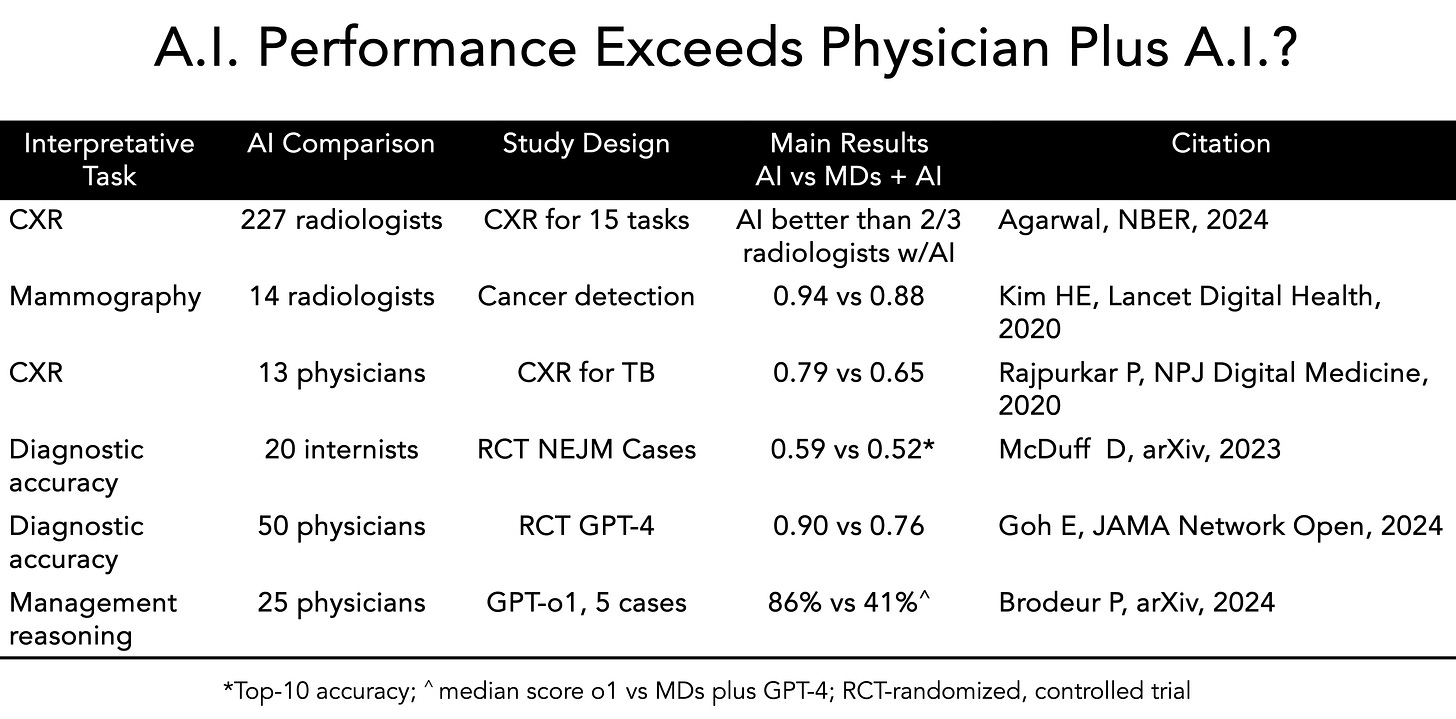

Our op-ed in today's NY Times explores an unexpected finding. A series of recent studies compared the performance of doctors using A.I. with that of A.I. alone, spanning medical scans, diagnostic accuracy, and management reasoning. Surprisingly, in many cases, A.I. systems working independently performed better than when combined with physician input. This pattern emerged consistently across different medical tasks, from chest X-ray and mammography interpretation to clinical decision-making. In some of the studies, summarized in the Table below, the performance gap favoring A.I. alone was large.

All along, most of us in the medical community had anticipated that the combination would achieve superior results. What explains these counterintuitive findings? They could simply reflect that physicians haven't been well grounded in using A.I., or that they have "automation neglect" (a bias against A.I.), or that the studies are relatively small and contrived: attempts at simulating medical practice that are a far cry from the complex, messy world of how we diagnose and care for patients. But there may be a more fundamental consideration: we may need to rethink how we divide responsibilities between human physicians and A.I. systems to achieve true synergy (1 + 1 = 5), not just additivity.

Rather than assuming that combining both approaches always yields better results, we should carefully consider which tasks are better suited for A.I., which for humans, and which truly benefit from collaboration. The path forward isn't about replacing physicians with A.I., but rather about finding the optimal partnership model. This might mean allowing A.I. to handle initial screening while having physicians focus on complex cases where contextual understanding is crucial.

As we navigate this unexpected territory, one thing becomes clear: the future of medicine won't be shaped by a simple choice between human or artificial intelligence, but by our ability to understand their respective strengths and limitations, and to orchestrate their collaboration in ways that truly benefit patient care.

The challenge ahead isn't just technological – it's about reimagining the role of both physician and A.I. in healthcare delivery. While headlines might suggest a future of "Robot Doctors" (as the Times titled our piece, which was certainly not our choice!), the evidence points to something more nuanced: finding the right balance between human expertise and artificial intelligence in ways that enhance and transform medical practice. This may require us to let go of some preconceptions and embrace new models that might initially feel counterintuitive but ultimately lead to better patient outcomes.

Here’s the piece, with more than the brief summary above. Hopefully you’ll find it interesting; it includes links to other studies that complement the ones in the Table. It’s obviously a work in progress, since we’re still very early in assessing the integration of large language models into the practice of medicine.

Opinion | When A.I. Alone Outperforms the Human-A.I. Partnership

Here is the Gift link to our NYT op-ed

The text with links follows:

The rapid rise in artificial intelligence has created intense discussions in many industries over what kind of role these tools can and should play — and health care has been no exception. The medical community largely anticipated that combining the abilities of doctors and A.I. would be the best of both worlds, leading to more accurate diagnoses and more efficient care.

That assumption might prove to be incorrect. A growing body of research suggests that A.I. is outperforming doctors, even when they use it as a tool.

A recent M.I.T.-Harvard study, of which one of us, Dr. Rajpurkar, is an author, examined how radiologists diagnose potential diseases from chest X-rays. The study found that when radiologists were shown A.I. predictions about the likelihood of disease, they often undervalued the A.I. input compared to their own judgment. The doctors stuck to their initial impressions even when the A.I. was correct, which led them to make less accurate diagnoses. Another trial yielded a similar result: When A.I. worked independently to diagnose patients, it achieved 92 percent accuracy, while physicians using A.I. assistance were only 76 percent accurate — barely better than the 74 percent they achieved without A.I.

This research is early and may evolve. But the findings more broadly indicate that right now, simply giving physicians A.I. tools and expecting automatic improvements doesn’t work. Physicians aren’t completely comfortable with A.I. and still doubt its utility, even if it could demonstrably improve patient care.

But A.I. will forge ahead, and the best thing for medicine to do is to find a role for it that doctors can trust. The solution, we believe, is a deliberate division of labor. Instead of forcing both human doctors and A.I. to review every case side by side and trying to turn A.I. into a kind of shadow physician, a more effective approach is to let A.I. operate independently on suitable tasks so that physicians can focus their expertise where it matters most.

What might this division of labor look like? Research points to three distinct approaches. In the first model, physicians start by interviewing patients and conducting physical examinations to gather medical information. A Harvard-Stanford study that Dr. Rajpurkar helped write demonstrates why this sequence matters — when A.I. systems attempted to gather patient information through direct interviews, their diagnostic accuracy plummeted — in one case from 82 percent to 63 percent. The study revealed that A.I. still struggles with guiding natural conversations and knowing which follow-up questions will yield crucial diagnostic information. By having doctors gather this clinical data first, A.I. can then apply pattern recognition to analyze that information and suggest potential diagnoses.

In another approach, A.I. begins by analyzing medical data and suggesting possible diagnoses and treatment plans. A.I. seems to have a natural penchant for such tasks: A 2024 study showed that OpenAI’s latest models perform well at complex critical thinking tasks like generating diagnoses and managing health conditions when tested on case studies, medical literature and patient scenarios. The physician’s role is then to apply clinical judgment to turn A.I.’s suggestions into a treatment plan, adjusting the recommendations based on a patient’s physical limitations, insurance coverage and health care resources.

The most radical model might be complete separation: having A.I. handle certain routine cases independently (like normal chest X-rays or low-risk mammograms), while doctors focus on more complex disorders or rare conditions with atypical features.

Early evidence suggests this approach can work well in specific contexts. A Danish study published last year found that an A.I. system could reliably identify about half of all normal chest X-rays, freeing up radiologists to devote more time to studying images that were deemed suspicious. In a landmark Swedish trial involving mammograms for more than 80,000 women, half the scans were assessed by two radiologists, as is usual. The other half were first evaluated by A.I.-supported screening, followed by review by one radiologist (or, in the rarer instances where the A.I. flagged an elevated risk, by two radiologists). The A.I.-assisted approach identified 20 percent more breast cancers while reducing the overall radiologist workload by almost half.

This might be the clearest path to addressing the shortage of health care workers that is hurting medicine. This model is particularly promising for underserved areas, where A.I. systems could provide initial screening and triage, so limited specialist resources can be redirected to more pressing issues.

All these approaches raise questions about liability, regulation and the need for ongoing clinician education. Medical training will need to adapt to help doctors understand not just how to use A.I., but when to rely on it and when to trust their own judgment. Perhaps most important, we still lack definitive proof that these approaches, tested in research studies or pilot programs, will achieve the same success in the messy realities of everyday care.

But the promise for patients is obvious: fewer bottlenecks, shorter waits and potentially better outcomes. For doctors, there’s potential for A.I. to alleviate the routine burdens so that health care might become more accurate, efficient and — paradoxically — more human.

****************************************************

Thanks for reading and subscribing to Ground Truths.

If you found this interesting, please share it!

That makes the work involved in putting these together especially worthwhile.

All content on Ground Truths (its newsletters, analyses, and podcasts) is free and open-access.

Paid subscriptions are voluntary and all proceeds from them go to support Scripps Research. They do allow for posting comments and questions, which I do my best to respond to. Many thanks to those who have contributed—they have greatly helped fund our summer internship programs for the past two years.

The challenging truth is that many physicians get stuck on their initial diagnosis and won't let go, even when there is evidence to the contrary. This is a powerful and sometimes dangerous bias. Instead of approaching it as, "AI is highly likely to be right, let me see if there are any reasons it might be wrong in this case," doctors tend to say, "I think it's X and the AI is wrong."

You project a worthwhile objective to develop over the near term. What about 10 to 15 years from now?

Suppose you need a surgical procedure (say 15 years from now), perhaps brain surgery. You now have a choice: do you want to be operated on by a human surgeon or a surgical robot?

Since the robot is networked with more than 1,000 other surgical robots around the world, it has their collective experience. The robot also has specialized limbs for both sensing and operating, and it has fast reflexes. There are lots of unknowns about the human surgeon: did she get enough sleep last night, did she argue with her spouse or kids this morning, is she feeling a little depressed? Were her previous surgeries acceptable but not optimal?