In the journal Nature today, my colleagues and I published an article on the future directions of generative AI (aka large language or foundation models) for the practice of medicine. These models open up a multitude of exciting opportunities in healthcare that we didn’t have before, along with many challenges and liabilities. I’ll briefly explain how we got here and what’s in store.

The Transformer model that transformed AI

Back in 2017, Google researchers published a paper (“Attention Is All You Need”) describing a new model architecture, which they dubbed the Transformer. It relies entirely on attention mechanisms, which weight how relevant each part of the input is to every other part, and it trains much faster because it processes all positions of a sequence in parallel. The authors proposed it as a replacement for recurrent and convolutional deep neural networks (RNNs and CNNs, respectively). Foreshadowing generative AI, they concluded: “We plan to extend the Transformer to problems involving input and output modalities other than text and to investigate local, restricted attention mechanisms to efficiently handle large inputs and outputs such as images, audio and video.”
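For readers curious what “attention” computes, here is a minimal sketch of the scaled dot-product attention defined in that paper, softmax(QKᵀ/√d_k)·V, written in plain Python on tiny lists rather than with a tensor library. The function names and toy inputs are illustrative choices of mine; real Transformers add learned projections, multiple heads, and masking, none of which are shown here.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q: Matrix, K: Matrix, V: Matrix) -> Tuple[Matrix, Matrix]:
    """Compute softmax(Q K^T / sqrt(d_k)) V one query row at a time.

    Q is n x d_k (queries), K is m x d_k (keys), V is m x d_v (values).
    Returns (outputs, n x d_v; attention weights, n x m).
    """
    d_k = len(K[0])
    outputs, weights = [], []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)  # non-negative weights that sum to 1
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w[j] * V[j][c] for j in range(len(V))) for c in range(len(V[0]))]
        outputs.append(out)
        weights.append(w)
    return outputs, weights


if __name__ == "__main__":
    # Toy example: the query resembles the first key, so it attends to it more.
    Q = [[1.0, 0.0]]
    K = [[1.0, 0.0], [0.0, 1.0]]
    V = [[10.0], [0.0]]
    out, w = scaled_dot_product_attention(Q, K, V)
    print("weights:", w)
    print("output:", out)
```

The “different levels of attention” are simply these softmax weights: inputs whose keys match the query more closely contribute more to the output.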

As I recently reviewed, AI in healthcare to date has been narrow, unimodal, and single-task. Of the more than 500 FDA-cleared or approved AI algorithms, almost all perform only 1 or at most 2 tasks. To go beyond that, we needed a model capable of ingesting multimodal data while attending to the relative importance of each input. This also required massive graphics processing unit (GPU) computational power and the concurrent advances in self-supervised learning reviewed here. These building blocks (the Transformer architecture, GPUs, self-supervised learning, and multimodal data inputs at massive scale) ultimately led to where we are today. GPT-4 is the most advanced LLM yet, and we’re still at a very early stage.
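Self-supervised learning is what makes training at this scale feasible: the training labels come from the data itself rather than from human annotators. As one illustration (the post does not say which objective any particular model uses), here is a minimal sketch of next-token prediction, the objective behind GPT-style models, turning unlabeled text into training pairs. The function name, the `context_size` parameter, and whitespace tokenization are simplifying assumptions; real models use subword tokenizers and much longer contexts.

```python
from typing import List, Tuple


def next_token_pairs(text: str, context_size: int = 3) -> List[Tuple[List[str], str]]:
    """Turn raw, unlabeled text into (context, next-token) training pairs.

    Whitespace splitting stands in for a real subword tokenizer.
    """
    tokens = text.split()
    pairs = []
    for i in range(1, len(tokens)):
        # The "label" is simply the next token in the text, so no annotation is needed.
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs


if __name__ == "__main__":
    for context, target in next_token_pairs("the patient reports chest pain"):
        print(context, "->", target)
```

Any large body of text (or, with other tokenizers, images and signals) can be converted this way, which is why the amount of usable training data grows so dramatically compared with hand-labeled datasets.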

By now, you have likely had conversations with ChatGPT and seen what it can do. It has “only” text/language inputs and outputs (it’s unimodal) and a training data cutoff in 2021. Yet you’ve probably had fun interacting with it and been impressed by the speed and sometimes remarkable accuracy and fluency of its outputs. It often makes a traditional Google search seem weak by comparison. That’s just a warm-up act.

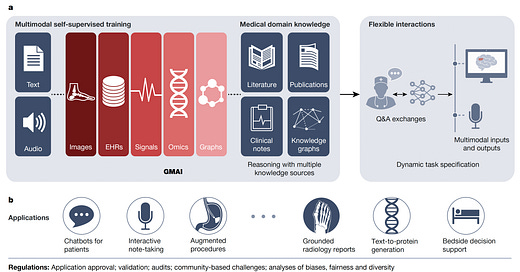

What It Looks Like in Medicine: GMAI

Good-bye to a constrained phase of just medical images, the sweet spot of deep learning. Hello to the full breadth of data from electronic health records, sensors, images, labs, genomics and other biologic layers of data, along with all forms of text and speech. The domains of knowledge include publications, books, and the broader corpus of medical literature, as well as the network relationships among entities (knowledge graphs). GPT-4 is among the first multimodal large language models, facile with both text/language and images.

That’s why you can already converse so well with generative AI about medical matters, even though none yet available have been pre-trained on the corpus of medical literature and multidimensional data from millions of patients. The Transformer architecture, which is ideally suited to those inputs, enables solving previously unseen problems by learning from other inputs and tasks. We call this Generalist Medical AI (GMAI).

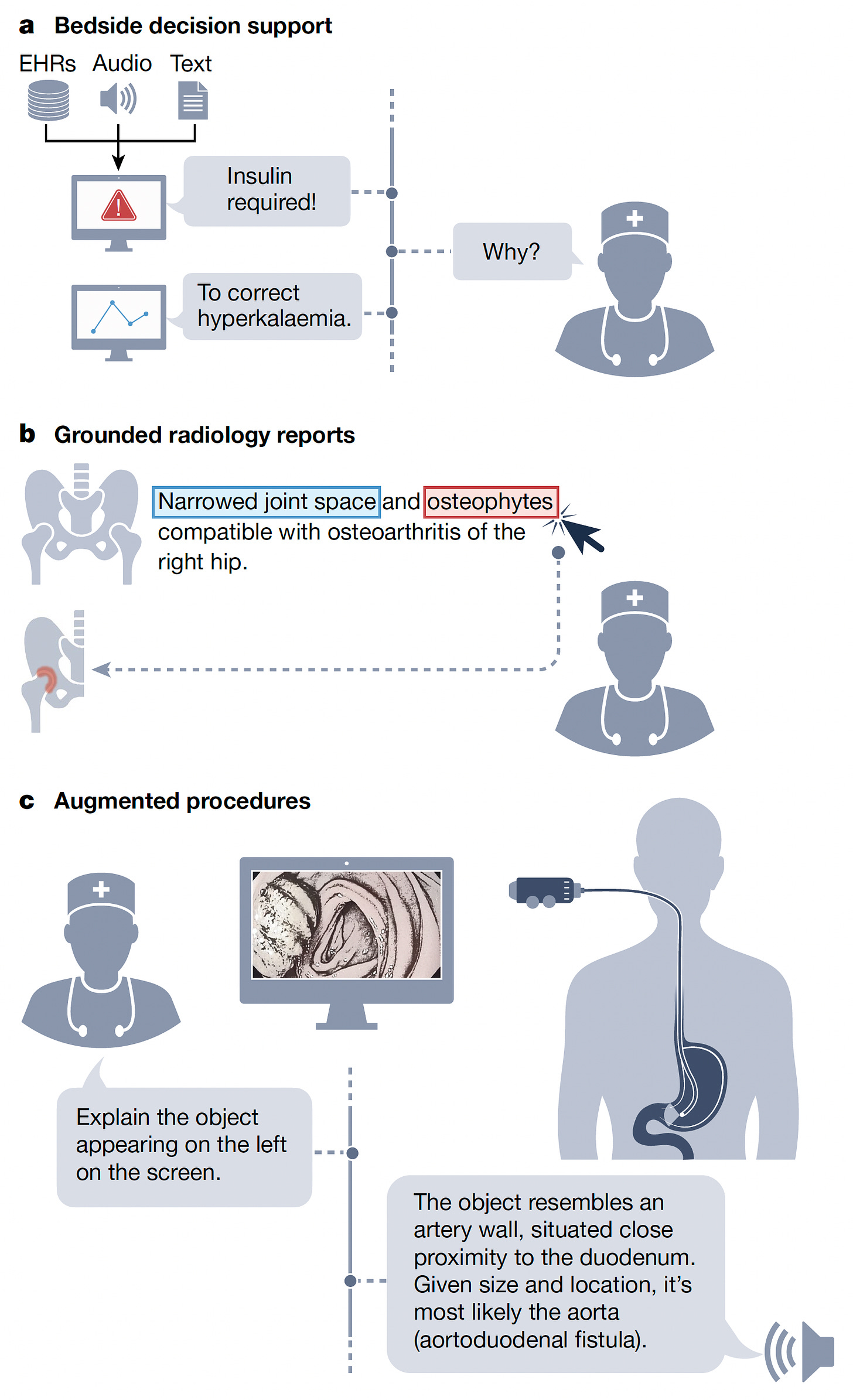

GMAI enables remarkably flexible interactions and a very long list of possible applications, with a few examples shown below. One is patients asking questions about their symptoms and data. As we wrote in the paper: “GMAI can build a holistic view of a patient’s condition using multiple modalities, ranging from unstructured descriptions of symptoms to continuous glucose monitor readings to patient-provided medication logs.”
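To make the idea of a “holistic view” across modalities a bit more concrete, here is a hypothetical sketch of one simple way heterogeneous patient inputs could be merged into a single time-ordered, tagged sequence that a language model can read. This is not the method described in the paper; the `Observation` class, the modality labels, and the sample readings are all made up for illustration.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass
class Observation:
    time: datetime
    modality: str  # hypothetical labels, e.g. "symptom", "cgm", "medication"
    content: str


def build_patient_context(observations: List[Observation]) -> str:
    """Merge observations from several modalities into one time-ordered, tagged text sequence."""
    ordered = sorted(observations, key=lambda o: o.time)
    lines = [f"[{o.modality.upper()} {o.time:%Y-%m-%d %H:%M}] {o.content}" for o in ordered]
    return "\n".join(lines)


if __name__ == "__main__":
    # Invented example data, listed out of order on purpose.
    obs = [
        Observation(datetime(2023, 4, 12, 8, 0), "cgm", "glucose 182 mg/dL"),
        Observation(datetime(2023, 4, 12, 7, 30), "symptom", "feeling shaky and thirsty"),
        Observation(datetime(2023, 4, 12, 8, 15), "medication", "took metformin 500 mg"),
    ]
    print(build_patient_context(obs))
```

A true GMAI model would likely encode signals such as glucose traces or images natively rather than as text; serializing everything to text is just the simplest way to show inputs from different sources lining up on one timeline.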

Another is the potential for clinical keyboard liberation via automated generation of notes, discharge summaries, pre-authorizations, and all the other forms of clinical documentation. A surgeon asking the model to identify something in the operative field (augmented procedure). A CT scan report that spotlights the image abnormality, or an image queried about a particular area of concern (grounded report). Quantifying how a finding has changed between images taken at different times, and comparing that change with the progression of the condition described in the medical literature. For bedside rounds and related LLM support for physicians, besides the illustration below on administering insulin, see the fictionalized Grabber Prologue summarized in my review of the new GPT-4 book. The book, based on 6 months of testing this LLM, provides many more examples of its capabilities in medicine.

Parenthetically, I found this glossary of terms very helpful for getting up to speed if you haven’t been following this space closely.

But

While there are so many exciting potential use cases, and we’re still not even into Chapter One of the GMAI story, there are striking liabilities and challenges that have to be dealt with. The “hallucinations” (aka fabrications or BS) are a major issue, along with bias, misinformation, lack of validation in prospective clinical trials, privacy and security, and deep regulatory concerns. These points are reviewed in the paper and my previous posts. In medicine, it is absolutely essential that there be a human in the loop providing oversight of any LLM output, knowing full well that these models are prone to serious mistakes, including making things up.

The technical challenges are formidable. One sentence in the paper provides some context: “PaLM, a 540-billion-parameter model developed by Google, required an estimated 8.4 million hours’ worth of tensor processing unit v4 chips for training, using roughly 3,000 to 6,000 chips at a time, amounting to millions of dollars in computational costs.” Data collection, ingestion of massive, diverse, well-organized data, model training, comprehensive validation, and deployment are some of the many daunting aspects of scaling LLMs for real-world use.
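To put those figures in perspective, a quick back-of-the-envelope calculation converts the quoted chip-hours into wall-clock training time. This sketch assumes that “8.4 million hours’ worth” means chip-hours and that the chip count stayed constant for the whole run; both are simplifications, and the function name is my own.

```python
def wall_clock_days(chip_hours: float, chips: int) -> float:
    """Days of training if `chips` chips run in parallel the entire time (simplifying assumption)."""
    return chip_hours / chips / 24


CHIP_HOURS = 8.4e6  # TPU v4 chip-hours, as quoted in the paper

if __name__ == "__main__":
    for chips in (3_000, 6_000):
        print(f"{chips:,} chips: about {wall_clock_days(CHIP_HOURS, chips):.0f} days")
```

Under these assumptions, the range of 3,000 to 6,000 chips works out to roughly 1,400 to 2,800 hours, or about two to four months of continuous computation on thousands of specialized chips.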

An Anecdote in the “Old” Era of ChatGPT

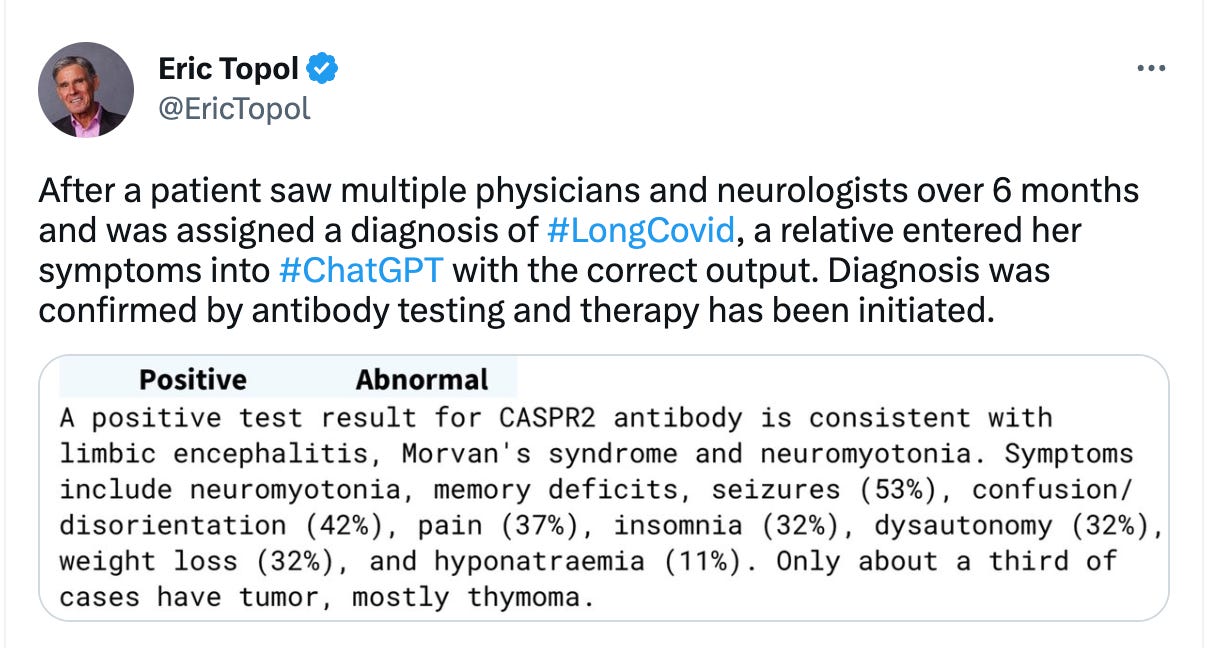

I recently posted a ChatGPT anecdote sent to me by a friend whose relative was diagnosed with limbic encephalitis after having carried the diagnosis of Long Covid.

I had already seen many examples in the book of GPT-4 making difficult diagnoses of rare conditions. Before posting, I asked ChatGPT more about this matter, since I knew nothing about the CASPR2 antibody test or whether it differentiated Long Covid, which has no validated treatment, from this form of autoimmune encephalitis, which is treatable. You can see the output below that convinced me this was interesting, illustrating the potential value of an LLM for a second opinion. Any opinion (in this case a second one), whether from an expert physician or a machine, requires very careful consideration of its authenticity and accuracy.

I want to thank my co-authors for the Nature paper: Michael Moor, Oishi Banerjee, Zahra Abad, Harlan Krumholz, Jure Leskovec, and Pranav Rajpurkar. We brainstormed about where generative AI can take us in medicine, and tried to acknowledge its many liabilities and challenges. There’s a long road ahead to get this story told and keep the guard rails on such a powerful tool.

We concluded the paper with this statement: “Ultimately, GMAI promises unprecedented possibilities for healthcare, supporting clinicians amid a range of essential tasks, overcoming communication barriers, making high-quality care more widely accessible, and reducing the administrative burden on clinicians to allow them to spend more time with patients.”

Thanks for reading and subscribing to Ground Truths.

The proceeds from all paid subscriptions will be donated to Scripps Research.