In recent days, there have been many notable points of progress in medical A.I., which collectively represent another jump since my review earlier this summer (here and here). The topics include randomized trials, a major foundation model using retinal images, medical diagnoses and conversations, and life science applications.

A Review of Medical A.I. Randomized Trials

One of the deficiencies of the field has been the lack of compelling data, of the kind that prospective clinical trials can provide, to demonstrate unequivocal benefit and the tradeoff of risks for AI in medicine. This week we posted a preprint review of the 84 randomized controlled trials (RCTs) of A.I. in medical practice to date (through August 2023), which represents far more progress than has been generally appreciated. Whereas a few years ago most of these had been conducted in China and were for detection of polyps during endoscopy and colonoscopy procedures, there has been marked expansion to many other specialties and countries around the world (Figure below).

Although trials in gastroenterology were still the most common (42%), radiology (15%), surgery (6%), and cardiology (6%) were also part of the mix. Most of the trials compared AI-assisted vs. unassisted care. Across 4 general categories of practice (care management, clinical decision making, diagnostic accuracy, and patient behavior), 82% of the trials reported positive results, with significant improvement.

By far the largest trial to date was for breast cancer detection, with over 80,000 participants in Sweden. A.I.-assisted reading reduced workload by 44% with no increase in the false-positive rate (1.5%) and a small increase in the cancer detection rate (6.1 vs. 5.1 per 1,000 participants screened for the AI-assisted and control groups, respectively). These results were reinforced by a new report last week, also from Sweden, of a prospective (non-randomized) study of over 55,000 women, which showed that adding A.I. detected 4% more cancers, with significantly fewer false-positive results (range of 6% to 55%), while requiring 50% less radiologist reading time. That last bit is particularly impressive.
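To put the detection gain in perspective, here is a back-of-the-envelope calculation using only the rounded rates quoted above (6.1 vs 5.1 cancers per 1,000 women screened). It is a sketch, not a reanalysis of the trial, and the helper name `compare_rates` is hypothetical.

```python
def compare_rates(ai_rate: float, control_rate: float, per: int = 1000) -> dict:
    """Compare two detection rates expressed per `per` people screened.

    Returns the absolute difference (extra detections per `per` screened),
    the relative increase over the control rate, and how many people would
    need to be screened with A.I. support to find one additional cancer.
    """
    absolute = ai_rate - control_rate     # extra cancers per `per` screened
    relative = absolute / control_rate    # fractional increase over control
    screens_per_extra = per / absolute    # screenings per additional cancer found
    return {
        "absolute_gain": absolute,
        "relative_increase": relative,
        "screens_per_extra_cancer": screens_per_extra,
    }


result = compare_rates(6.1, 5.1)
print(f"Absolute gain: {result['absolute_gain']:.1f} per 1,000 screened")
print(f"Relative increase: {result['relative_increase']:.0%}")
print(f"Screens per additional cancer: {result['screens_per_extra_cancer']:.0f}")
```

With these rounded inputs, the script reports an absolute gain of about 1 cancer per 1,000 women screened, a relative increase of roughly 20%, and on the order of 1,000 A.I.-supported screens per additional cancer found. Because the published rates are rounded to one decimal place, treat these derived numbers as approximate.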

While the breast cancer study results are encouraging, A.I. for colon cancer, which equates to machine vision during colonoscopy, has raised some questions. In a new meta-analysis of 21 trials of colon adenoma detection, A.I. increased the detection rate from 36% to 44%, but the polyps found by machine vision were small, and A.I. didn’t change detection of advanced, clinically significant adenomas (those >10 mm or with high-grade dysplasia). That led to some unnecessary biopsies in the A.I. intervention group. A separate large randomized trial in over 3,200 people with a positive fecal occult blood test confirmed the meta-analysis findings. Given the high proportion of RCTs that are colonoscopy trials, this lack of clinical significance is an important limitation to underscore.
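A quick calculation shows what the jump from 36% to 44% amounts to. This sketch uses only the pooled rates quoted above and assumes, as is standard, that the detection rate is the share of colonoscopies in which at least one adenoma is found; the helper `detection_rate_change` is hypothetical. Because the metric counts any adenoma, it cannot distinguish small polyps from advanced adenomas, which is precisely the limitation at issue.

```python
def detection_rate_change(control_pct: float, ai_pct: float) -> tuple[float, float]:
    """Return (percentage-point change, relative change in percent).

    Both inputs are detection rates in percent, e.g. the share of
    colonoscopies in which at least one adenoma is found (assumed definition).
    """
    points = ai_pct - control_pct              # absolute change, in percentage points
    relative_pct = 100 * points / control_pct  # change relative to the control rate
    return points, relative_pct


points, relative_pct = detection_rate_change(36, 44)
print(f"Change: {points:.0f} percentage points ({relative_pct:.0f}% relative increase)")
print(f"Roughly {points:.0f} more colonoscopies per 100 with an adenoma found")
```

With these inputs it reports an 8-percentage-point gain, a relative increase of about 22%, or roughly 8 more colonoscopies per 100 in which an adenoma is found, none of which says anything about whether those adenomas were clinically significant.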

A similar example comes from a skin cancer detection A.I. app, evaluated in a study of nearly 19,000 people in the Netherlands who used the app, compared with matched controls. There was a 32% increase in potential skin cancers detected, but most were determined to be benign. The study was based on claims data (a substantial limitation), and while there was a net increase in cancers detected, the cost of detecting one additional pre-malignant skin lesion was very high (over $2,700). All of these findings point to the need for prospective, randomized trials that assess clinical outcomes, not just surrogate endpoints (like a polyp or skin lesion). While many more RCTs have been done than expected, for the most part they still lack the compelling evidence needed for broad adoption and implementation, let alone reimbursement from insurers.
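A "cost per additional lesion detected" figure like the one quoted above is typically an incremental ratio of the general form below. The study's actual cost and detection inputs are not given here, so the symbols are generic placeholders, not a reconstruction of its method.

```latex
\text{cost per additional lesion detected} = \frac{C_{\text{app}} - C_{\text{control}}}{D_{\text{app}} - D_{\text{control}}}
```

Here $C$ is the total cost of care in each group and $D$ is the number of (pre)malignant lesions detected in each group. When most of the extra findings turn out to be benign, the denominator stays small while the added workup costs still enter the numerator, which helps explain how such a ratio can climb so high.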

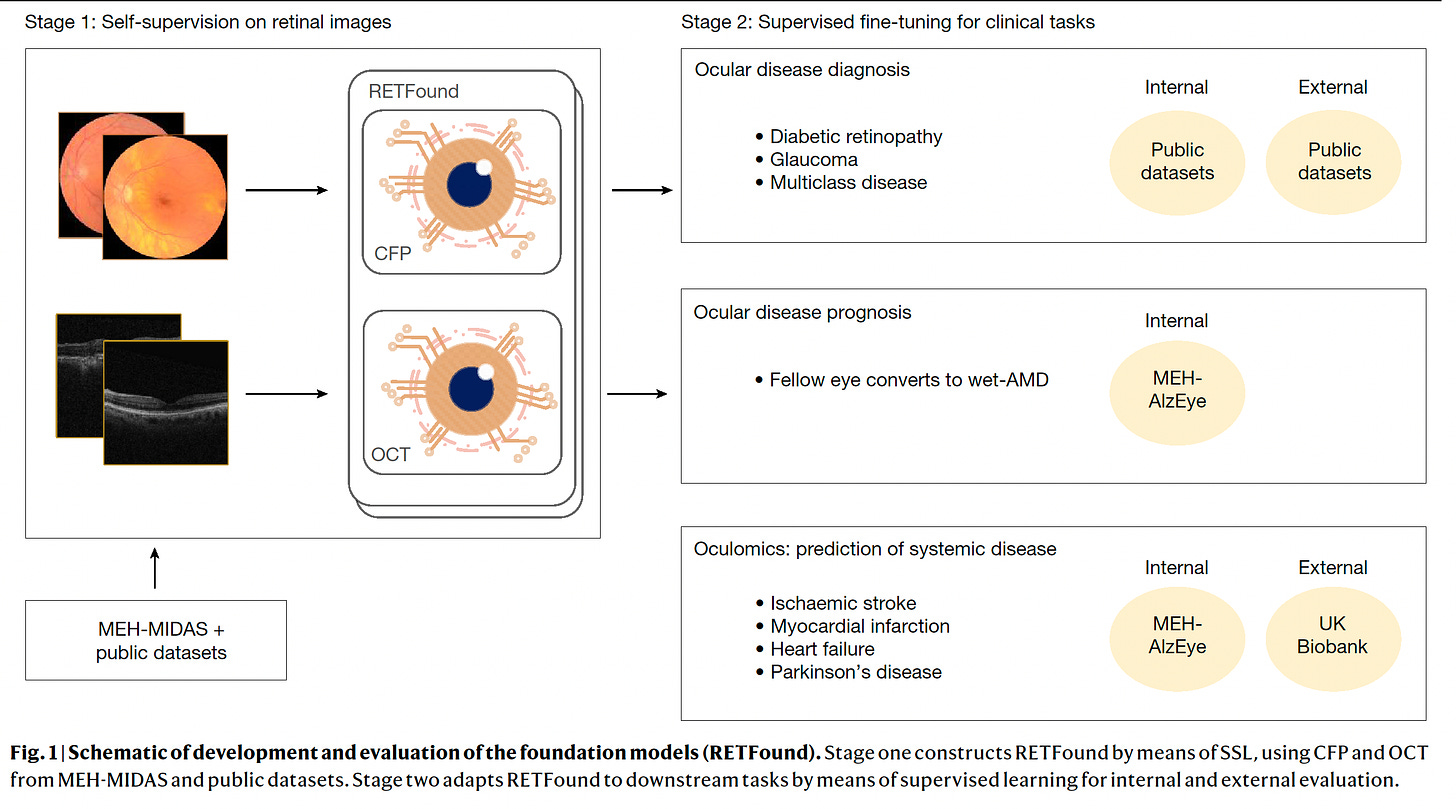

A Foundation Model of Retinal Images

A major study published in Nature this week, led by Pearse Keane and colleagues, applied self-supervised learning to 1.6 million retinal images and demonstrated that the resulting transformer model could predict not only sight-threatening eye conditions (such as diabetic retinopathy and glaucoma) but also many systemic diseases. The latter, which included heart attacks, heart failure, stroke, and Parkinson’s disease, were predicted by adapting the pre-trained RETFound model to each specific condition. We have already seen from separate supervised learning studies how the retina is a window to diseases of almost all organ systems (kidney, hepatobiliary, heart, brain, etc.), but RETFound demonstrated the multiplier power of these transformer models, which can go well beyond the specific conditions presented.

As you may know, obtaining annotated data sets in medicine for supervised learning is a huge bottleneck, so this study, whose pre-training did not require labeled images, provides a bypass pathway. Its accuracy in predicting systemic diseases was also externally validated using the UK Biobank resource (Figure below). All of the data were provided open-access, which will further help and accelerate the use of this and future transformer models (as reviewed previously, these are the basis for ChatGPT, GPT-4, and so many other large language models). As Mustafa Sultan pointed out, “Imagine if every Mt Everest climber had to build their own Base Camp from scratch. How many people would summit?” I certainly view this as a landmark paper that represents the first major foundation model published in healthcare. (Yes, as a co-author, I’m obviously biased!)

Making the Diagnosis

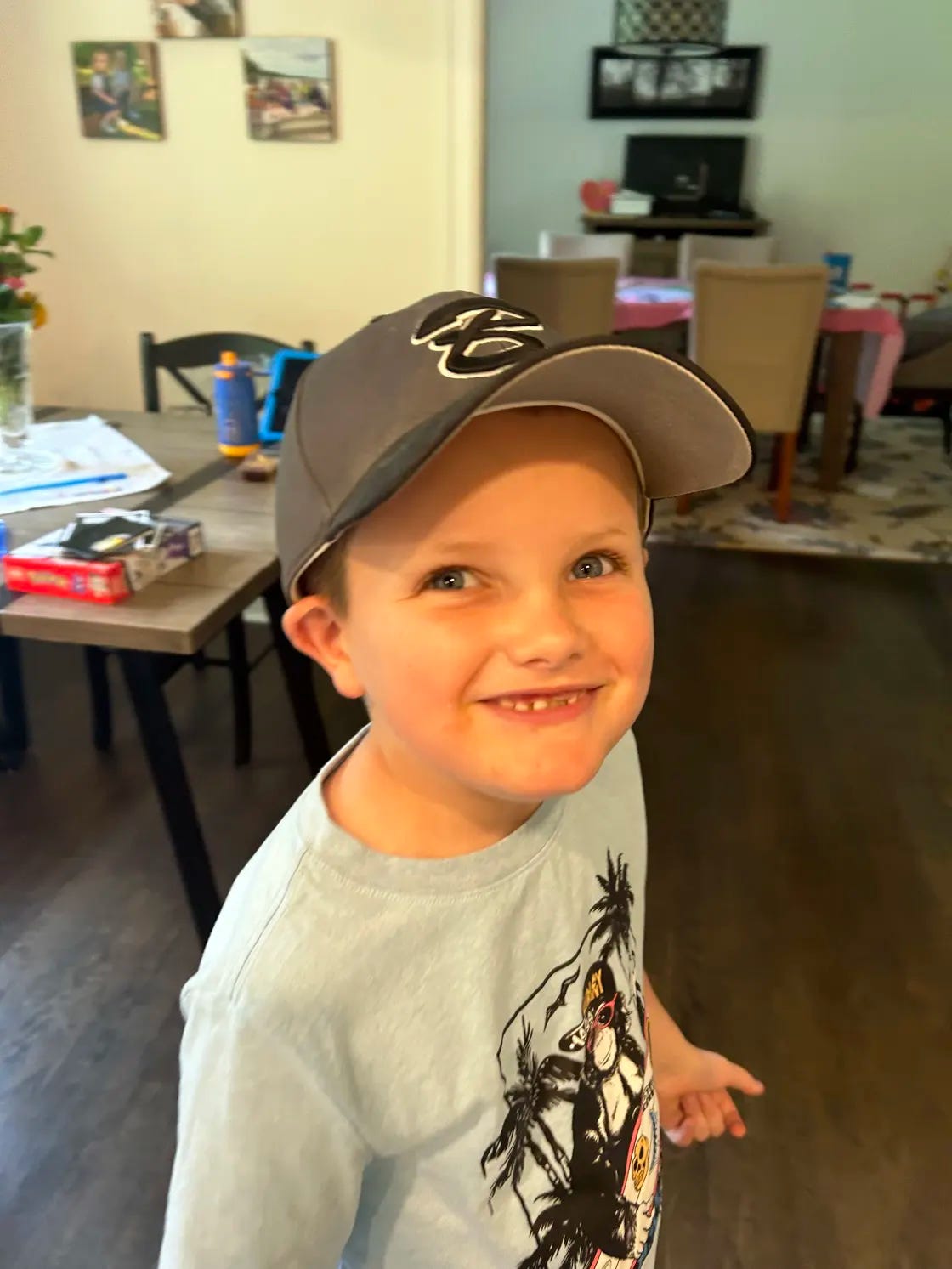

An instructive ChatGPT case was disseminated this week via The Today Show, of all places. Alex, shown below, underwent a 3-year search for a diagnosis involving 17 doctors and dentists because of unexplained and increasing pain, arrested growth, a dragging left foot when walking, severe headaches, and other symptoms. Ultimately, his mother entered Alex’s symptoms into ChatGPT and got the diagnosis of spina bifida occulta, which had led to tethered cord syndrome. Alex had surgery to detach the spinal cord from where it was stuck at the tailbone and has done very well. ChatGPT to the rescue.

This is one of many complex, difficult-to-diagnose cases that have been cracked by large language models, as I previously summarized. It makes one wonder why, in the future, we wouldn’t have A.I. help with all such cases. Someday.

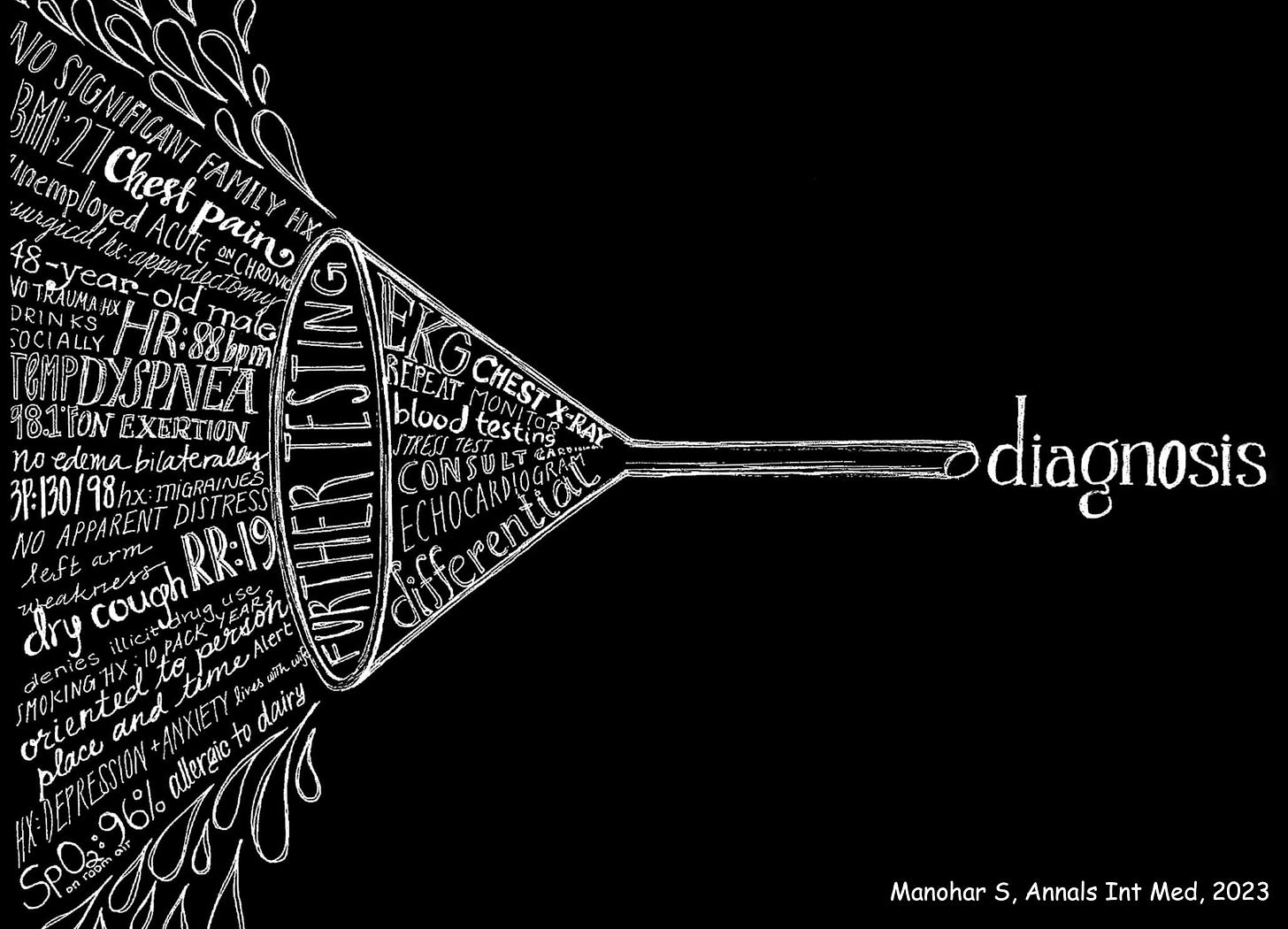

That’s what generative A.I. will be especially good for: the diagnostic funnel, processing all of a patient’s data and the relevant medical literature to come up with possible diagnoses that physicians might miss or that might not come to mind.

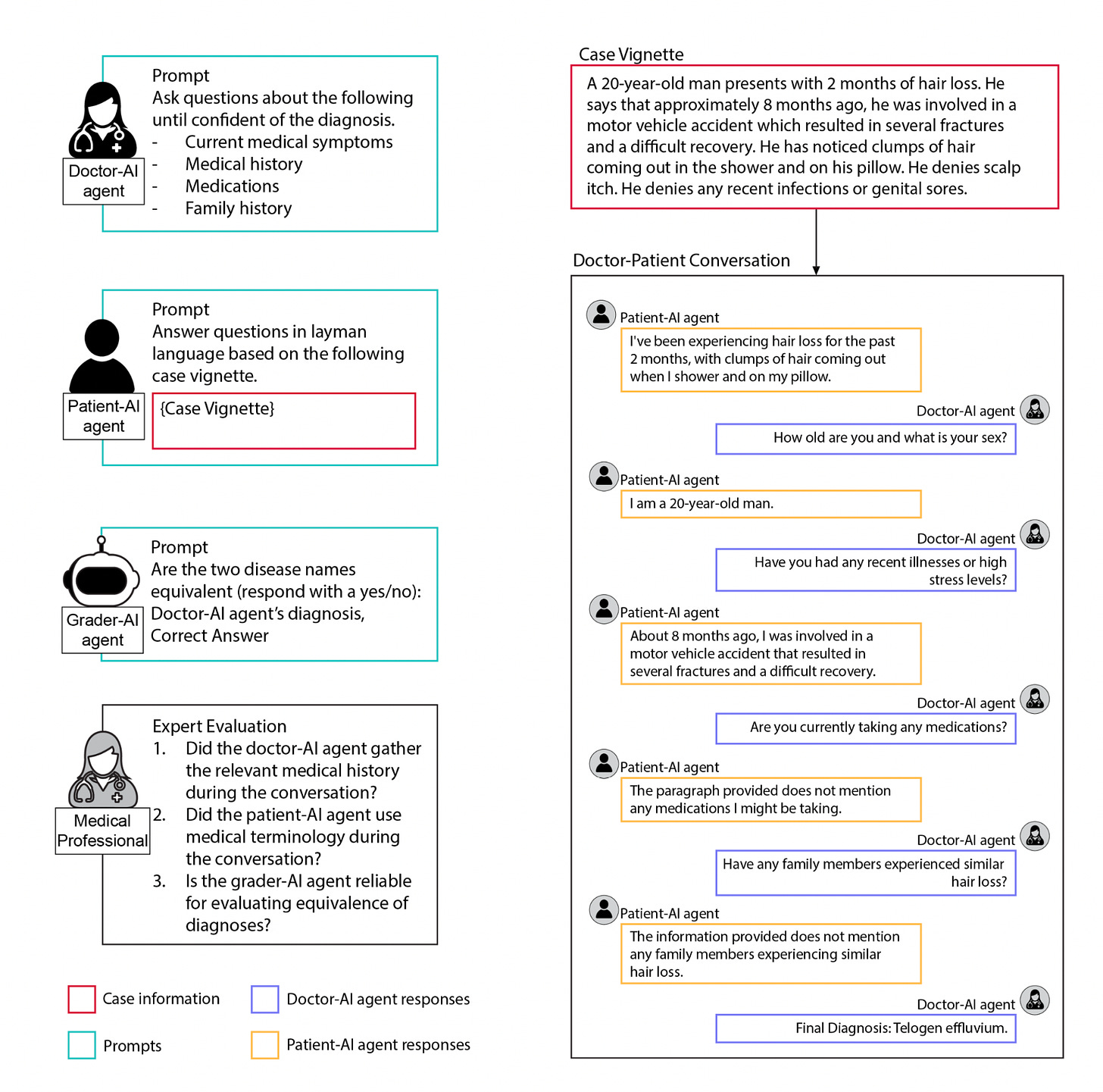

That brings me to a new preprint by Pranav Rajpurkar and colleagues, which examined ways to use GPT-4 and GPT-3.5 to improve diagnostic accuracy in 140 skin condition cases, both for doctors and for patients, as seen below. While multi-turn (back-and-forth) conversations reduced diagnostic accuracy, using a conversational summarization A.I. tool to consolidate the patient data worked well. This report demonstrates that we are still learning the optimal way to present data to large language models to get the most accurate diagnoses, here emphasizing a path toward focused interpretation rather than the distractions that can arise in an extended, piecemeal conversation.

My Science Perspective

Yesterday, I published a succinct summary in Science on where we stand with the progress in deep learning, transformer models, and multimodal A.I. It has extensive links to many of the relevant papers and emphasizes critical caveats along with the exciting momentum and opportunities that we’re seeing.

For the sake of convenience, and in the hope of piquing your interest, I’ll place the lede paragraph here:

Machines don’t have eyes, but you wouldn’t know that if you followed the progression of deep learning models for accurate interpretation of medical images, such as x-rays, computed tomography (CT) and magnetic resonance imaging (MRI) scans, pathology slides, and retinal photos. Over the past several years, there has been a torrent of studies that have consistently demonstrated how powerful “machine eyes” can be, not only compared with medical experts but also for detecting features in medical images that are not readily discernable by humans. For example, a retinal scan is rich with information that people can’t see, but machines can, providing a gateway to multiple aspects of human physiology, including blood pressure; glucose control; risk of Parkinson’s, Alzheimer’s, kidney, and hepatobiliary diseases; and the likelihood of heart attacks and strokes. As a cardiologist, I would not have envisioned that machine interpretation of an electrocardiogram would provide information about the individual’s age, sex, anemia, risk of developing diabetes or arrhythmias, heart function and valve disease, kidney, or thyroid conditions. Likewise, applying deep learning to a pathology slide of tumor tissue can also provide insight about the site of origin, driver mutations, structural genomic variants, and prognosis. Although these machine vision capabilities for medical image interpretation may seem impressive, they foreshadow what is potentially far more expansive terrain for artificial intelligence (AI) to transform medicine. The big shift ahead is the ability to transcend narrow, unimodal tasks, confined to images, and broaden machine capabilities to include text and speech, encompassing all input modes, setting the foundation for multimodal AI.

Don’t Forget A.I. for Life Science

The cover of this week’s Economist is shown above; it was tied to 2 pieces (here and here) on the progress that A.I. is bringing to science. With respect to life science, there have been some noteworthy examples of accelerating drug discovery, along with the ability to predict 3-D protein structure from amino acid sequence for most of the protein universe, with many derivative breakthroughs (unraveling the structure of the nuclear pore complex, for one). Large language models (LLMs) have added the ability to generate high-resolution electron microscope images that would be far too expensive to record, as well as de novo molecular design: the creation of new, non-extant proteins that could serve as drug candidates. For balance, however, Jane Dyson, a structural biologist and colleague at Scripps Research, pointed out that for disordered proteins, “the A.I.’s predictions are mostly garbage. It’s not a revolution that puts all of our scientists out of business.” Like everything else in A.I., there’s still plenty of work to do. The idea of A.I. as a “multiplier for human ingenuity” (a catchy phrase from Demis Hassabis) remains dangling, and the prospect that science itself could be transformed by “self-driving laboratories,” which would move faster and more efficiently and be able to forecast new discoveries before they happen, is intriguing.

Yes, all eyes are on biomedical A.I.; it is receiving intense attention and study. We’re still early in the era of LLMs. Unlike the A.I. tools in the randomized trials reviewed above, LLMs have not yet been tested in any randomized trial of clinical outcomes. The compelling evidence needed to change medical practice is still lacking. But the transformative potential remains extraordinary. Since it’s my major research interest, and that of our group at the Scripps Research Translational Institute, you can count on more summary newsletters like this one as we go forward.

Thanks very much for reading and your support of Ground Truths!

PS This is the 100th edition of Ground Truths, so a bit of a milestone to celebrate.

All proceeds go to Scripps Research.

First off, congratulations on reaching a 100th post milestone, Dr. Topol. I appreciate the depth and care with which you’ve reported on the status of AI studies. You demystify, even for lay people like me, AI’s potential, as well as potential pitfalls. I was struck by the ability of AI to reduce radiologist reading time and anticipate there are many time-saving possibilities with low downside--for example, in handling admin paperwork so doctors can focus more on their patients. I saw the ChatGPT story when you tweeted it out, and it gave me renewed appreciation for the potential to use AI to get out of the “silo vision” tendencies in delivering care. I suspect many of us know of instances where “silo” practice of medicine resulted in numerous visits, diagnoses, and extended time periods before an accurate diagnosis was achieved. Well, there is lots more about which to be hopeful here--particularly if pursued responsibly, recognizing limitations, and proceeding carefully, as you model so well in what you write here. Thank you so much for all your good work on this and for all else you do.

WHAT DO YOU THINK OF THIS TECHNOLOGY?https://pme.uchicago.edu/news/inverse-vaccine-shows-potential-treat-multiple-sclerosis-and-other-autoimmune-diseases