Arvind Narayanan and Sayash Kapoor are well-regarded computer scientists at Princeton University and have just published a book with a provocative title, AI Snake Oil. Here I’ve interviewed Sayash and challenged him on this dismal title, for which he provides solid examples of predictive AI’s failures. Then we get into the promise of generative AI.

Full videos of all Ground Truths podcasts can be seen on YouTube here. The audios are also available on Apple and Spotify.

Transcript with links to audio and external links to key publications

Eric Topol (00:06):

Hello, it's Eric Topol with Ground Truths, and I'm delighted to welcome the co-author of a new book AI SNAKE OIL and it's Sayash Kapoor who has written this book with Arvind Narayanan of Princeton. And so welcome, Sayash. It's wonderful to have you on Ground Truths.

Sayash Kapoor (00:28):

Thank you so much. It's a pleasure to be here.

Eric Topol (00:31):

Well, congratulations on this book. What's interesting is how much you've achieved at such a young age. Here you are named in TIME100 AI’s inaugural edition as one of those eminent contributors to the field. And you're currently a PhD candidate at Princeton, is that right?

Sayash Kapoor (00:54):

That's correct, yes. I work at the Center for Information Technology Policy, which is a joint program between the computer science department and the school of public and international affairs.

Eric Topol (01:05):

So before you started working on your PhD in computer science, you already were doing this stuff, I guess, right?

Sayash Kapoor (01:14):

That's right. So before I started my PhD, I used to work at Facebook as a machine learning engineer.

Eric Topol (01:20):

Yeah, well you're taking it to a more formal level here. Before I get into the book itself, what was the background? I mean you did describe it in the book why you decided to write a book, especially one that was entitled AI Snake Oil: What Artificial Intelligence Can Do, What It Can't, and How to Tell the Difference.

Background to Writing the Book

Sayash Kapoor (01:44):

Yeah, absolutely. So I think for the longest time both Arvind and I had been sort of looking at how AI works and how it doesn't work, what are cases where people are somewhat fooled by the potential for this technology and fail to apply it in meaningful ways in their life. As an engineer at Facebook, I had seen how easy it is to slip up or make mistakes when deploying machine learning and AI tools in the real world. And had also seen that, especially when it comes to research, it's really easy to make mistakes even unknowingly that inflate the accuracy of a machine learning model. So as an example, one of the first research projects I did when I started my PhD was to look at the field of political science in the subfield of civil war prediction. This is a field which tries to predict where the next civil war will happen and in order to better be prepared for civil conflict.

(02:39):

And what we found was that there were a number of papers that claimed almost perfect accuracy at predicting when a civil war will take place. At first this seemed sort of astounding. If AI can really help us predict when a civil war will start like years in advance sometimes, it could be game changing, but when we dug in, it turned out that every single one of these claims where people claim that AI was better than two decades old logistic regression models, every single one of these claims was not reproducible. And so, that sort of set the alarm bells ringing for the both of us and we sort of dug in a little bit deeper and we found that this is pervasive. So this was a pervasive issue across fields that were quickly adopting AI and machine learning. We found, I think over 300 papers and the last time I compiled this list, I think it was over 600 papers that suffer from data leakage. That is when you can sort of train on the sets that you're evaluating your models on. It's sort of like teaching to the test. And so, the machine learning model seems like it does much better when you evaluate it on your data compared to how it would really work out in the real world.
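The "teaching to the test" effect described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and none of it comes from the civil war papers: the data is synthetic, the labels are pure noise, and the model is a toy 1-nearest-neighbor classifier. Because the labels are random, no model can genuinely beat roughly 50% accuracy, yet evaluating on the rows used for training reports a perfect score.

```python
import random

def nearest_neighbor_predict(train, x):
    """Return the label of the training point closest to x (1-NN)."""
    closest = min(train, key=lambda row: abs(row[0] - x))
    return closest[1]

def accuracy(train, rows):
    """Fraction of rows whose label the 1-NN model predicts correctly."""
    hits = sum(nearest_neighbor_predict(train, x) == y for x, y in rows)
    return hits / len(rows)

def make_rows(rng, n):
    # Feature and label are independent: there is nothing real to learn.
    return [(rng.random(), rng.randint(0, 1)) for _ in range(n)]

rng = random.Random(0)
train_rows = make_rows(rng, 400)
held_out_rows = make_rows(rng, 400)

# Leaky evaluation: the model is scored on the same rows it memorized.
leaky_accuracy = accuracy(train_rows, train_rows)
# Honest evaluation: the model is scored on rows it has never seen.
honest_accuracy = accuracy(train_rows, held_out_rows)

print(f"evaluated on training rows: {leaky_accuracy:.2f}")
print(f"evaluated on held-out rows: {honest_accuracy:.2f}")
```

The gap between the two numbers is the inflation that leakage produces. Real cases are usually subtler than scoring on the training set directly, but the mechanism is the same.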

Eric Topol (03:48):

Right. You say in the book, “the goal of this book is to identify AI snake oil - and to distinguish it from AI that can work well if used in the right ways.” Now I have to tell you, it's kind of a downer book if you're an AI enthusiast because there's not a whole lot of positive here. We'll get to that in a minute. But you break down the types of AI, which I'm going to challenge a bit into three discrete areas, the predictive AI, which you take a really harsh stance on, say it will never work. Then there's generative AI, obviously the large language models that took the world by storm, although they were incubating for several years when ChatGPT came along and then content moderation AI. So maybe you could tell us about your breakdown to these three different domains of AI.

Three Types of AI: Predictive, Generative, Content Moderation

Sayash Kapoor (04:49):

Absolutely. I think one of our main messages across the book is that when we are talking about AI, often what we are really interested in are deeper questions about society. And so, our breakdown of predictive, generative, and content moderation AI sort of reflects how these tools are being used in the real world today. So for predictive AI, one of the motivations for including this in the book as a separate category was that we found that it often has nothing to do with modern machine learning methods. In some cases it can be as simple as decades old linear regression tools or logistic regression tools. And yet these tools are sold under the package of AI. Advances that are being made in generative AI are sold as if they apply to predictive AI as well. Perhaps as a result, what we are seeing is across dozens of different domains, including insurance, healthcare, education, criminal justice, you name it, companies have been selling predictive AI with the promise that we can use it to replace human decision making.

(05:51):

And I think that last part is where a lot of our issues really come down to because these tools are being sold as far more than they're actually capable of. These tools are being sold as if they can enable better decision making for criminal justice. And at the same time, when people have tried to interrogate these tools, what we found is these tools essentially often work no better than random, especially when it comes to some consequential decisions such as job automation. So basically deciding who gets to be called on the next level of like a job interview or who is rejected, right as soon as they submit the CV. And so, these are very, very consequential decisions and we felt like there is a lot of snake oil in part because people don't distinguish between applications that have worked really well or where we have seen tremendous advances such as generative AI and applications where essentially we've stalled for a number of decades and these tools don't really work as claimed by the developers.

Eric Topol (06:55):

I mean the way you partition that, the snake oil, which is a tough metaphor, and you even show the ad from 1905 of snake oil in the book. You're really getting at predictive AI and how it is using old tools and selling itself as some kind of breakthrough. Before I challenge that, are we going to be able to predict things? By the way, using generative AI, not as you described, but I would like to go through a few examples of how bad this has been and since a lot of our listeners and readers are in the medical world or biomedical world, I'll try to get to those. So one of the first ones you mentioned, which I completely agree, is how prediction of Covid from the chest x-ray and there were thousands of these studies that came throughout the pandemic. Maybe you could comment about that one.

Some Flagrant Examples

Sayash Kapoor (08:04):

Absolutely. Yeah, so this is one of my favorite examples as well. So essentially Michael Roberts and his team at the University of Cambridge a year or so after the pandemic looked back at what had happened. I think at the time there were around 500 studies that they included in the sample. And they looked back to see how many of these would be useful in a clinical setting beyond just the scope of writing a research paper. And they started out by using a simple checklist to see, okay, are these tools well validated? Are the training and the testing data separate? And so on. So they ran through the simple checklist and that excluded all but 60 of these studies from consideration. So apart from 60 studies, none of these other studies even passed very, very basic criteria for being included in the analysis. Now for these 60, it turns out that if you take a guess about how many were useful, I'm pretty confident most guesses would be wrong.

(09:03):

There were exactly zero studies that were useful in a clinically relevant setting. And the reasons for this, I mean in some cases the reasons were as bizarre as training a machine learning model to predict Covid where all of the positive samples of people who had Covid were from adults. But all of the negative samples of people who didn't have Covid were from children. And so, essentially claiming that the resulting classifier can predict who has Covid is bizarre because all the classifier is doing is looking at the chest x-ray and basically predicting which x-ray belongs to a child versus an adult. And so, this is the sort of error we saw. In some cases we saw duplicates in the training and test set. So you have the same person that is being used for training the model and that is also used for evaluating the model. So simply memorizing a given sample of x-rays would be enough to achieve a very high performance. And so, for issues like these, I think all 60 of these studies proved to be not useful in a clinically relevant setting. And I think this is sort of the type of pattern that we've seen over and over again.
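The adult-versus-child confound described above can be sketched in a few lines. The samples, the ages, and the `shortcut_classifier` function are all hypothetical; the function stands in for a model that has learned only to tell adult x-rays from children's x-rays. It looks perfect on the confounded study sample and drops to chance once age no longer tracks Covid status.

```python
def make_study_sample():
    """Confounded sample: every positive is an adult, every negative a child."""
    adults = [{"age": 30 + i, "covid": True} for i in range(50)]
    children = [{"age": 5 + i % 10, "covid": False} for i in range(50)]
    return adults + children

def make_clinic_sample():
    """Hypothetical clinic: adults only, half with Covid and half without."""
    return [{"age": 30 + i % 50, "covid": i % 2 == 0} for i in range(100)]

def shortcut_classifier(patient):
    # Stand-in for a model that only picked up the age signal in the image.
    return patient["age"] >= 18

def accuracy(sample):
    """Fraction of patients whose Covid status the shortcut gets right."""
    correct = sum(shortcut_classifier(p) == p["covid"] for p in sample)
    return correct / len(sample)

study_accuracy = accuracy(make_study_sample())
clinic_accuracy = accuracy(make_clinic_sample())

print(f"accuracy on confounded study sample: {study_accuracy:.2f}")
print(f"accuracy on adult-only clinic sample: {clinic_accuracy:.2f}")
```

A checklist item such as "do positives and negatives come from comparable populations?" is aimed at exactly this failure.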

Eric Topol (10:14):

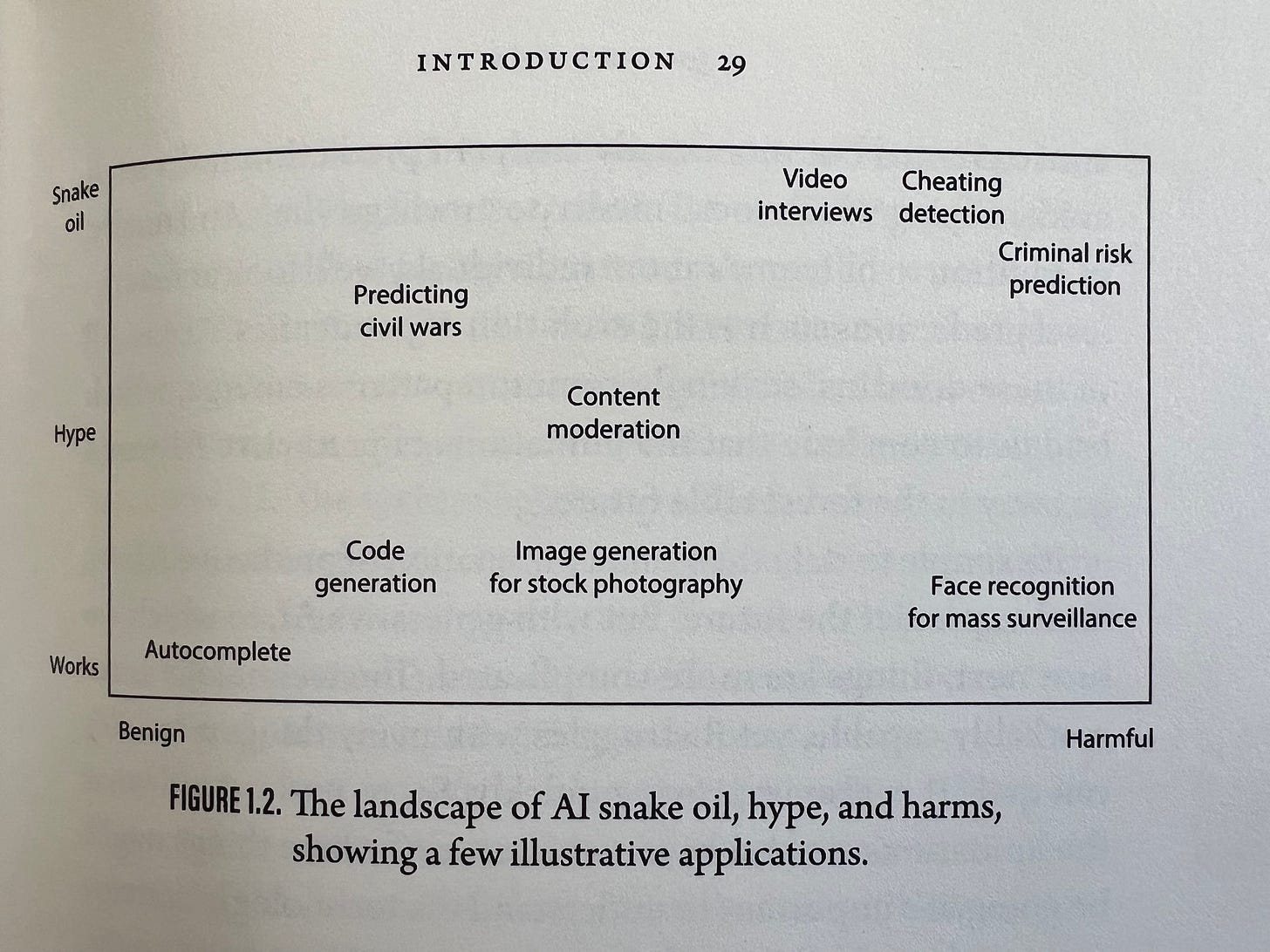

Yeah, and I agree with you on that point. I mean that was really a flagrant example and that would fulfill your title of your book, which as I said is a very tough title. But on page 29, and we'll have this in the post. You have a figure, the landscape of AI snake oil, hype, and harm.

And the problem is there is nothing good in this landscape. So on the y-axis you have works, hype, snake oil going up on the y-axis. And on the x-axis, you have benign and harmful. So the only thing you have that works and that's benign is autocomplete. I wouldn't say that works. And then you have works facial recognition for surveillance is harmful. This is a pretty sobering view of AI. Obviously, there's many things that are working that aren't on this landscape. So I just would like to challenge, are you a bit skewed here and only fixating on bad things? Because this diagram is really rough. I mean, there's so much progress in AI and you have in here you mentioned the predicting civil wars, and obviously we have these cheating detection, criminal risk prediction. I mean a lot of problems, video interviews that are deep fakes, but you don't present any good things.

Optimism on Generative AI

Sayash Kapoor (11:51):

So to be clear, I think both Arvind and I are somewhat paradoxically optimistic about the future of generative AI. And so, the decision to focus on snake oil was a very intentional one from our end. So in particular, I think at various places in the book we outline why we're optimistic, what types of applications we think we're optimistic about as well. And the reason we don't focus on them is that it basically comes down to the fact that no one wants to read a book that has 300 pages about the virtues of spellcheck or AI for code generation or something like that. But I think I completely agree and acknowledge that there are lots of positive applications that didn't make the cut for the book as well. That was because we wanted people to come to this from a place of skepticism so that they're not fooled by the hype.

(12:43):

Because essentially we see even these positive uses of AI being lost out if people have unrealistic expectations from what an AI tool should do. And so, pointing out snake oil is almost a prerequisite for being able to use AI productively in your work environment. I can give a couple of examples of where or how we've sort of manifested this optimism. One is AI for coding. I think writing code is an application that I do, at least I use AI a lot. I think almost half of the code I write these days is generated, at least the first draft is generated using AI. And yet if I did not know how to program, it would be a completely different question, right? Because for me pointing out that, oh, this syntax looks incorrect or this is not handling the data in the correct way is as simple as looking at a piece of code because I've done this a few times. But if I weren't an expert on programming, it would be completely disastrous because even if the error rate is like 5%, I would have dozens of errors in my code if I'm using AI to generate it.

(13:51):

Another example of how we've been using it in our daily lives is Arvind has two little kids and he's built a number of applications for his kids using AI. So I think he's a big proponent of incorporating AI into children's lives as a force for good rather than having a completely hands-off approach. And I think both of these are just two examples, but I would say a large amount of our work these days occurs with the assistance of AI. So we are very much optimistic. And at the same time, I think one of the biggest hindrances to actually adopting AI in the real world is not understanding its limitations.

Eric Topol (14:31):

Right. Yeah, you say in the book quote, “the two of us are enthusiastic users of generative AI, both in our work and our personal lives.” It just doesn't come through as far as the examples. But before I leave the troubles of predictive AI, I'd like to get into a few more examples because that's where your book shines in convincing that we got some trouble here and we need to be completely aware. So one of the most famous, well, there's a couple we're going to get into, but one I'd like to review with you, it's in the book, is the prediction of sepsis in the Epic model. So as you know very well, Epic is the most used electronic health record system among health systems, and they launched, without ever having published it, an algorithm that would tell when the patient was hospitalized if they actually had sepsis or risk of sepsis. Maybe you could take us through that, what you do in the book, and it truly was a fiasco.

The Sepsis Debacle

Sayash Kapoor (15:43):

Absolutely. So I think back in 2016/2017, Epic came up with a system that would help healthcare providers predict which patients are most at risk of sepsis. And I think, again, this is a very important problem. I think sepsis is one of the leading causes of death worldwide and even in the US. And so, if we could fix that, I think it would be a game changer. The problem was that there were no external validations of this algorithm for the next four years. So for four years, from 2017 to 2021, the algorithm was used by hundreds of hospitals in the US. And in 2021, a team from University of Michigan did this study in their own hospital to see what the efficacy of the sepsis prediction model is. They found out that Epic had claimed an AUC of between 0.76 and 0.83, and the actual AUC was closer to 0.6, and an AUC of 0.5 is making guesses at random.
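For readers less familiar with the metric, AUC can be read as the probability that a randomly chosen patient who develops the condition gets a higher risk score than a randomly chosen patient who does not. The sketch below computes it directly from that pairwise definition. The scores and labels are made up for illustration and have nothing to do with Epic's model or the Michigan data.

```python
def auc(scores, labels):
    """Probability that a random positive case outranks a random negative one.

    Ties count as half a win. This pairwise definition is equivalent to the
    area under the ROC curve.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))

labels = [1, 1, 1, 0, 0, 0]  # 1 = developed the condition, 0 = did not

# Every positive case scores above every negative case.
perfect = auc([0.9, 0.8, 0.7, 0.3, 0.2, 0.1], labels)
# The same score for everyone carries no information, like guessing at random.
uninformative = auc([0.5] * 6, labels)
# Some positives rank below some negatives.
partial = auc([0.9, 0.4, 0.2, 0.8, 0.3, 0.1], labels)

print(perfect, uninformative, round(partial, 3))
```

On this scale the distance from 0.5 is what matters, so a model near 0.6 is much closer to guessing than one between 0.76 and 0.83.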

(16:42):

So this was much, much worse than the company's claims. And I think even after that, it still took a year for Epic to roll back this algorithm. So at first, Epic's claims were that this model works well and that's why hospitals are adopting it. But then it turned out that Epic was actually incentivizing hospitals to adopt sepsis prediction models. I think they were giving credits of hundreds of thousands of dollars in some cases. If a hospital satisfied a certain set of conditions, one of these conditions was using a sepsis prediction model. And so, we couldn't really take their claims at face value. And finally in October 2022, Epic essentially rolled back this algorithm. So they went from this one size fits all sepsis prediction model to a model that each hospital has to train on its own data, an approach which I think is more likely to work because each hospital's data is different. But it's also more time consuming and expensive for the hospitals because all of a sudden you now need your own data analysts to be able to roll out this model to be able to monitor it.

(17:47):

I think this study also highlights many of the more general issues with predictive AI. These tools are often sold as if they're replacements for an existing system, but then when things go bad, essentially they're replaced with tools that do far less. And companies often go back to the fine print saying that, oh, we should always deploy it with the human in the loop, or oh, it needs to have these extra protections that are not our responsibility, by the way. And I think that gap between what developers claim and how the tool actually works is what is most problematic.

Eric Topol (18:21):

Yeah, no, I mean it's an egregious example, and again, it fulfills like what we discussed with statistics, but even worse because it was marketed and it was incentivized financially and there's no doubt that some patients were completely miscategorized and potentially hurt. The other one, that's a classic example that went south is the Optum UnitedHealth algorithm. Maybe you could take us through that one as well, because that is yet another just horrible case of how people were discriminated against.

The Infamous Optum Algorithm

Sayash Kapoor (18:59):

Absolutely. So Optum, another health tech company created an algorithm to prioritize high risk patients for preemptive care. So I think it was around when Obamacare was being introduced that insurance networks started looking into how they could reduce costs. And one of the main ways they identified to reduce costs is basically preemptively caring for patients who are extremely high risk. So in this case, they decided to keep 3% of the patients in the high risk category and they built a classifier to decide who's the highest risk, because potentially once you have these patients, you can proactively treat them. There might be fewer emergency room visits, there might be fewer hospitalizations and so on. So that's all fine and good. But what happened when they implemented the algorithm was that every machine learning model needs like the target variable, what is being predicted at the end of the day. What they decided to predict was how much patient would pay, how much would they charge, what cost the hospital would incur if they admitted this patient.

(20:07):

And they essentially use that to predict who should be prioritized for healthcare. Now unsurprisingly, it turned out that white patients often pay a lot more or are able to pay a lot more when it comes to hospital visits. Maybe it's because of better insurance or better conditions at work that allow them to take leave and so on. But whatever the mechanism is, what ended up happening with this algorithm was I think black patients with the same level of healthcare prognosis were half as likely or about much less likely compared to white ones of getting enrolled in this high risk program. So they were much less likely to get this proactive care. And this was a fantastic study by Obermeyer, et al. It was published in Science in 2019. Now, what I think is the most disappointing part of this is that Optum did not stop using this algorithm after this study was released. And that was because in some sense the algorithm was working precisely as expected. It was an algorithm that was meant to lower healthcare costs. It wasn't an algorithm that was meant to provide better care for patients who need it most. And so, even after this study was rolled out, I think Optum continued using this algorithm as is. And I think as far as I know, even today this is or some version of this algorithm is still in use across the network of hospitals that Optum serves.
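The role of the target variable can be made concrete with a small sketch. All numbers here are invented for illustration and are not from the Obermeyer et al. study: two groups have identical illness levels, group B is assumed to incur half the cost of group A at the same level of illness, and the program enrolls the top 3% of patients by whatever target is chosen.

```python
def make_patients():
    """Two groups with identical illness levels but different spending."""
    patients = []
    for illness in range(100):
        # Assumed for illustration: group B incurs half the cost of group A
        # at the same level of illness.
        patients.append({"group": "A", "illness": illness, "cost": illness * 100})
        patients.append({"group": "B", "illness": illness, "cost": illness * 50})
    return patients

def enroll_top(patients, target, fraction=0.03):
    """Enroll the top `fraction` of patients ranked by the target variable."""
    n_slots = round(len(patients) * fraction)
    ranked = sorted(patients, key=lambda p: p[target], reverse=True)
    return ranked[:n_slots]

def share_of_group_b(enrolled):
    """Fraction of enrolled patients who belong to group B."""
    return sum(p["group"] == "B" for p in enrolled) / len(enrolled)

patients = make_patients()
share_by_cost = share_of_group_b(enroll_top(patients, "cost"))
share_by_illness = share_of_group_b(enroll_top(patients, "illness"))

print(f"group B share when ranking by cost:    {share_by_cost:.2f}")
print(f"group B share when ranking by illness: {share_by_illness:.2f}")
```

Nothing in the ranking step refers to group membership. The disparity comes entirely from choosing cost as the quantity to predict, which is the point made above about the algorithm working "precisely as expected."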

Eric Topol (21:31):

No, it's horrible the fact that it was exposed by Ziad Obermeyer’s paper in Science and that nothing has been done to change it, it's extraordinary. I mean, it's just hard to imagine. Now you do summarize the five reasons predictive AI fails in a nice table, we'll put that up on the post as well. And I think you've kind of reviewed that as these case examples. So now I get to challenge you about predictive AI because I don't know that there's such a fine line between that and generative AI or large language models. So as you know, the group at DeepMind and now others have done weather forecasting with multimodal large language models and have come up with some of the most accurate weather forecasting we've ever seen. And I've written a piece in Science about medical forecasting. Again, taking all the layers of a person's data and trying to predict if they're high risk for a particular condition, including not just their electronic record, but their genomics, proteomics, their scans and labs and on and on and on, exposures, environmental.

Multimodal A.I. in Medicine

(22:44):

So I want to get your sense about that because this is now a coalescence of where you took down predictive AI for good reasons, and then now these much more sophisticated models that are integrating not just large data sets, but truly multimodal. Now, some people think multimodal means only text, audio, speech and video images, but here we're talking about multimodal layers of data as for the weather forecasting model or earthquake prediction or other things. So let's get your views on that because they weren't really presented in the book. I think they're a positive step, but I want to see what you think.

Sayash Kapoor (23:37):

No, absolutely. I think maybe the two questions are sort of slightly separate in my view. So for things like weather forecasting, I think weather forecasting is a problem that's extremely amenable to generative AI or to making predictions about the future. And I think one of the key differences there is that we don't have the problem of feedback loops with humans. We are not making predictions about individual human beings. We are rather making predictions about what happens with geological outcomes. We have good differential equations that we've used to predict them in the past, and those are already pretty good. But I do think deep learning has taken us one step further. So in that sense, I think that's an extremely good example of what doesn't really fit within the context of the chapter because we are thinking about decisions about individual human beings. And you rightly point out that that's not really covered within the chapter.

(24:36):

For the second part about incorporating multimodal data, genomics data, everything about an individual, I think that approach is promising. What I will say though is that so far we haven't seen it used for making individual decisions and especially consequential decisions about human beings because oftentimes what ends up happening is we can make very good predictions. That's not in question at all. But even with these good predictions about what will happen to a person, sometimes intervening on the decision is hard because oftentimes we treat prediction as a problem of correlations, but making decisions is a problem of causal estimation. And that's where those two sort of approaches disentangle a little bit. So one of my favorite examples of this is this model that was used to predict who should be released before screening when someone comes in with symptoms of pneumonia. So let's say a patient comes in with symptoms of pneumonia, should you release them on the day of?

(25:39):

Should you keep them in the hospital or should you transfer them to the ICU? And these ML researchers were basically trying to solve this problem. They found out that the neural network model they developed, this was two decades ago, by the way. The neural network model they developed was extremely accurate at predicting who would basically have a high risk of having complications once they get pneumonia. But it turned out that the model was saying essentially that anyone who comes in who has asthma and who comes in with symptoms of pneumonia is the lowest risk patient. Now, why was this? This was because in the past training data, when such patients would come into the hospital, these patients would be transferred directly to the ICU because the healthcare professionals realized that could be a serious condition. And so, it turned out that actually patients who had asthma who came in with symptoms of pneumonia were actually the lowest risk amongst the population because they were taken such good care of.

(26:38):

But now if you use this prediction that a patient comes in with symptoms of pneumonia and they have asthma, and so they're low risk, if you use this to make a decision to send them back home, that could be catastrophic. And I think that's the danger with using predictive models to make decisions about people. Now, again, I think the scope and consequences of decisions also vary. So you could think of using this to surface interesting patterns in the data, especially at a slightly larger statistical level to see how certain subpopulations behave or how certain groups of people are likely to develop symptoms or whatever. But I think as soon as it comes to making decisions about people, the paradigm of problem solving changes because as long as we are using correlational models, I think it's very hard to say what will happen if we change the conditions, what will happen if the decision making mechanism is very different from one where the data was collected.
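The gap between correlation and decision in the pneumonia example can be put into numbers. Every figure below is hypothetical, including the counterfactual risk for asthma patients who are sent home, and is chosen only to show the shape of the argument rather than to reproduce the original study.

```python
# All numbers below are hypothetical, chosen only to illustrate the argument.
historical = {
    # group: (patients, deaths) under the care they actually received
    "asthma (sent to ICU)": (100, 2),
    "no asthma (ward care)": (900, 90),
}

# What a purely correlational model learns: the observed death rate per group.
observed_risk = {group: deaths / n for group, (n, deaths) in historical.items()}
lowest_risk_group = min(observed_risk, key=observed_risk.get)

# Assumed counterfactual: risk for asthma patients if sent home instead of
# receiving the intensive care that produced the low observed rate.
ASSUMED_RISK_IF_SENT_HOME = 0.30

n_asthma, deaths_with_icu = historical["asthma (sent to ICU)"]
expected_deaths_if_sent_home = n_asthma * ASSUMED_RISK_IF_SENT_HOME

print(f"group the model ranks as lowest risk: {lowest_risk_group}")
print(f"deaths under historical ICU care: {deaths_with_icu}")
print(f"expected deaths if sent home: {expected_deaths_if_sent_home:.0f}")
```

The observed risk is accurate as a description of the past. It stops being valid the moment the decision it informs removes the care that made the risk low.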

Eric Topol (27:37):

Right. No, I mean where we agree on this is that at the individual level, using multimodal AI with all these layers of data that have now recently become available or should be available, that has to be compared ideally in a randomized trial with standard of care today, which doesn't use any of that. And to see whether or not that decision's made, does it change the natural history and is it an advantage, that's yet to be done. And I agree, it's a very promising pathway for the future. Now, I think you have done what is a very comprehensive sweep on the predictive AI failures. You've mentioned here in our discussion, your enthusiasm and in the book about generative AI positive features and hope and excitement perhaps even. You didn't really yet, we haven't discussed much on the content moderation AI that you have discretely categorized. Maybe you could just give us the skinny on your sense of that.

Content Moderation AI

Sayash Kapoor (28:46):

Absolutely. So content moderation AI is AI that's used to sort of clean up social media feeds. Social media platforms have a number of policies about what's allowed and not allowed on the platforms. Simple things such as spam are obviously not allowed because let's say people start spamming the platform, it becomes useless for everyone. But then there are other things like hate speech or nudity or pornography and things like that, which are also disallowed on most if not all social media platforms today. And I think a lot of the ways in which these policies are enforced today is using AI. So you might have an AI model that runs every single time you upload a photo to Facebook, for instance. And not just one perhaps hundreds of such models to detect if it has nudity or hate speech or any of these other things that might violate the platform's terms of service.

(29:40):

So content moderation AI is AI that's used to make these decisions. And very often in the last few years we've seen that when something gets taken down, for instance, Facebook deletes a post, people often blame the AI for having a poor understanding. Let's say of satire or not understanding what's in the image to basically say that their post was taken down because of bad AI. Now, there have been many claims that content moderation AI will solve social media's problems. In particular, we've heard claims from Mark Zuckerberg who in a senate testimony I think back in 2018, said that AI is going to solve most if not all of their content moderation problems. So our take on content moderation AI is basically this. AI is very, very useful for solving the simple parts of content moderation. What is a simple part? So basically the simple parts of content moderation are, let's say you have a large training data of the same type of policy violation on a platform like Facebook.

(30:44):

If you have large data sets, and if these data sets have a clear line in the sand, for instance, with nudity or pornography, it's very easy to create classifiers that will automate this. On the other hand, the hard part of content moderation is not actually just creating these AI models. The hard part is drawing the line. So when it comes to what is allowed and not allowed on platforms, these platforms are essentially making decisions about speech. And that is a topic that's extremely fraught. It's fraught in the US, it's also fraught globally. And essentially these platforms are trying to solve this really hard problem at scale. So they're trying to come up with rules that apply to every single user of the platform, like over 3 billion users in the case of Facebook. And this inevitably has these trade-offs about what speech is allowed versus disallowed that are hard to say one way or the other.

(31:42):

They're not black and white. And what we think is that AI has no place in this hard part of content moderation, which is essentially human. It's essentially about adjudicating between competing interests. And so, when people claim that AI will solve these many problems of content moderation, I think what they're often missing is that there's this extremely large number of things you need to do to get content moderation right. AI solves one of these dozen or so things, which is detecting and taking down content automatically, but all of the rest of it involves essentially human decisions. And so, this is sort of the brief gist of it. There are also other problems. For example, AI doesn't really work so well for low resource languages. It doesn't really work so well when it comes to nuances and so on that we discussed in the book. But we think some of these challenges are solvable in the medium to long term. But these questions around competing interests of power, I think are beyond the domain of AI even in the medium to long term.

Age 28! and Career Advice

Eric Topol (32:50):

No, I think you nailed that. I think this is an area that you've really aptly characterized and shown the shortcomings of AI and how the human factor is so critically important. So what's extraordinary here is you're just 28 and you are rocking it here with publications all over the place on reproducibility, transparency, evaluating generative AI, AI safety. You have a website on AI snake oil that you're collecting more things, writing more things, and of course you have the experience of having worked in the IT world with Facebook and also I guess also Columbia. So you're kind of off to the races here as one of the really young leaders in the field. And I am struck by that, and maybe you could comment about the inspiration you might provide to other young people. You're the youngest person I've interviewed for Ground Truths, by the way, by a pretty substantial margin, I would say. And this is a field where it attracts so many young people. So maybe you could just talk a bit about your career path and your advice for people. They may be the kids of some of our listeners, but they also may be some of the people listening as well.

Sayash Kapoor (34:16):

Absolutely. First, thank you so much for the kind words. I think a lot of this work is with collaborators without whom, of course, I would never be able to do this. I think Arvind is a great co-author and supporter. I think in terms of my career path, it was sort of like a zigzag, I would say. It wasn't clear to me when I was an undergrad if I wanted to do grad school or go into the industry, and I sort of on a whim went to work at Facebook, and it was because I'd been working on machine learning for a little bit of time, and I just thought, it's worth seeing what the other side has to offer beyond academia. And I think that experience was very, very helpful. One of the things, I talk to a lot of undergrads here at Princeton, and one of the things I've seen people be very concerned about is, what is the grad school they're going to get into right after undergrad?

(35:04):

And I think it's not really a question you need to answer now. I mean, in some cases I would say it's even very helpful to have a few years of industry experience before getting into grad school. At least that has definitely been my experience. Beyond that, I think working in a field like AI, I think it's very easy to be caught up with all of the new things that are happening each day. So I'm not sure if you know, but AI has I think over 500 to 1,000 new arXiv papers every single day. And with this rush, I think there's this expectation that you might put on yourself that being successful requires a certain number of publications or a certain threshold of things. And I think more often than not, that is counterproductive. So it has been very helpful for me, for example, to have collaborators who are thinking long term, so this book, for instance, is not something that would be very valued within the CS community, I would say. I think the CS community values peer-reviewed papers a lot more than they do books, and yet we chose to write it because I think the staying power of a book or the longevity of a book is much more than any single paper could do. So the other concrete thing I found very helpful is optimizing for a different metric compared to what the rest of the community seems to be doing, especially when it comes to fast moving fields like AI.

Eric Topol (36:29):

Well, that last piece of advice is important because I think too often people, whether it's computer scientists, life scientists, whoever, they don't realize that their audience is much broader. And that reaching the public with things like a book or op-eds or essays, varied ways that are intended for public consumption, not for, in this case, computer scientists. So that's why I think the book is a nice contribution. I don't like the title because it's so skewed. And also the content is really trying to hammer it home. I hope you write a sequel book on the positive sides of AI. I did want to ask you, when I read the book, I thought I heard your voice. I thought you had written the book, and Arvind maybe did some editing. You wrote about Arvind this and Arvind that. Did you write the first draft of the book and then he kind of came along?

Sayash Kapoor (37:28):

No, absolutely not. So the way we wrote the book was we basically started writing it in parallel, and I wrote the first draft of half the chapters and he wrote the first draft of the other half, and that was essentially all the way through. So we would sort of write a draft, pass it to the other person, and then keep doing this until we sent it to our publishers.

Eric Topol (37:51):

Okay. So I guess I was thinking of the chapters you wrote where it came through. I'm glad that it was a shared piece of work because that's good, because that’s what co-authoring is all about, right? Well, Sayash, it's really been a joy to meet you and congratulations on this book. I obviously have expressed my objections and my disagreements, but that's okay because this book will feed the skeptics of AI. They'll love this. And I hope that the positive side, which I think is under expressed, will not be lost and that you'll continue to work on this and be a conscience. You may know I've interviewed a few other people in the AI space that are similarly like you, trying to assure its safety, its transparency, the ethical issues. And I think we need folks like you. I mean, this is what helps get it on track, keeping it from getting off the rails or what it shouldn't be doing. So keep up the great work and thanks so much for joining.

Sayash Kapoor (39:09):

Thank you so much. It was a real pleasure.

************************************************

Thanks for listening, reading or watching!

The Ground Truths newsletters and podcasts are all free, open-access, without ads.

Please share this post/podcast with your friends and network if you found it informative!

Voluntary paid subscriptions all go to support Scripps Research. Many thanks for that—they greatly helped fund our summer internship programs for 2023 and 2024.

Thanks to my producer Jessica Nguyen and Sinjun Balabanoff for audio and video support at Scripps Research.

Note: you can select preferences to receive emails about newsletters, podcasts, or all. I don’t want to bother you with an email for content that you’re not interested in.
